7/ A project of this scale is only possible through team science. A shout-out to Ben Balas, Mark Lescroart, Paul MacNeilage, Kamran Binaee, and many others off this platform. This work was supported by NSF.

7/ A project of this scale is only possible through team science. A shout-out to @bjbalas @KamranBinaee @neuroMDL @SavvyHalow (and many others off this platform) and to @Chris_I_Baker @drfeifei and others for their wise counsel. This work was supported by @NSF.

6/ 📊 What can you do with VEDB? Study attention and visual behavior in dynamic, natural environments. Develop and test models of visual perception and navigation. Enhance applications in AR/VR, autonomous systems, and more! 🚗🔍

5/ Insights from VEDB: as one example, we can assess the "face diet" of observers in the world. The top row shows face and hand locations in the video, and the bottom row shows where faces and hands land relative to gaze.

3/🌍 Why is this important? VEDB bridges the gap between controlled lab studies and real-world visual experience. We can now explore how eye movements and navigation interact in natural settings, leading to more robust models in cognitive science and beyond. 🧠🤖

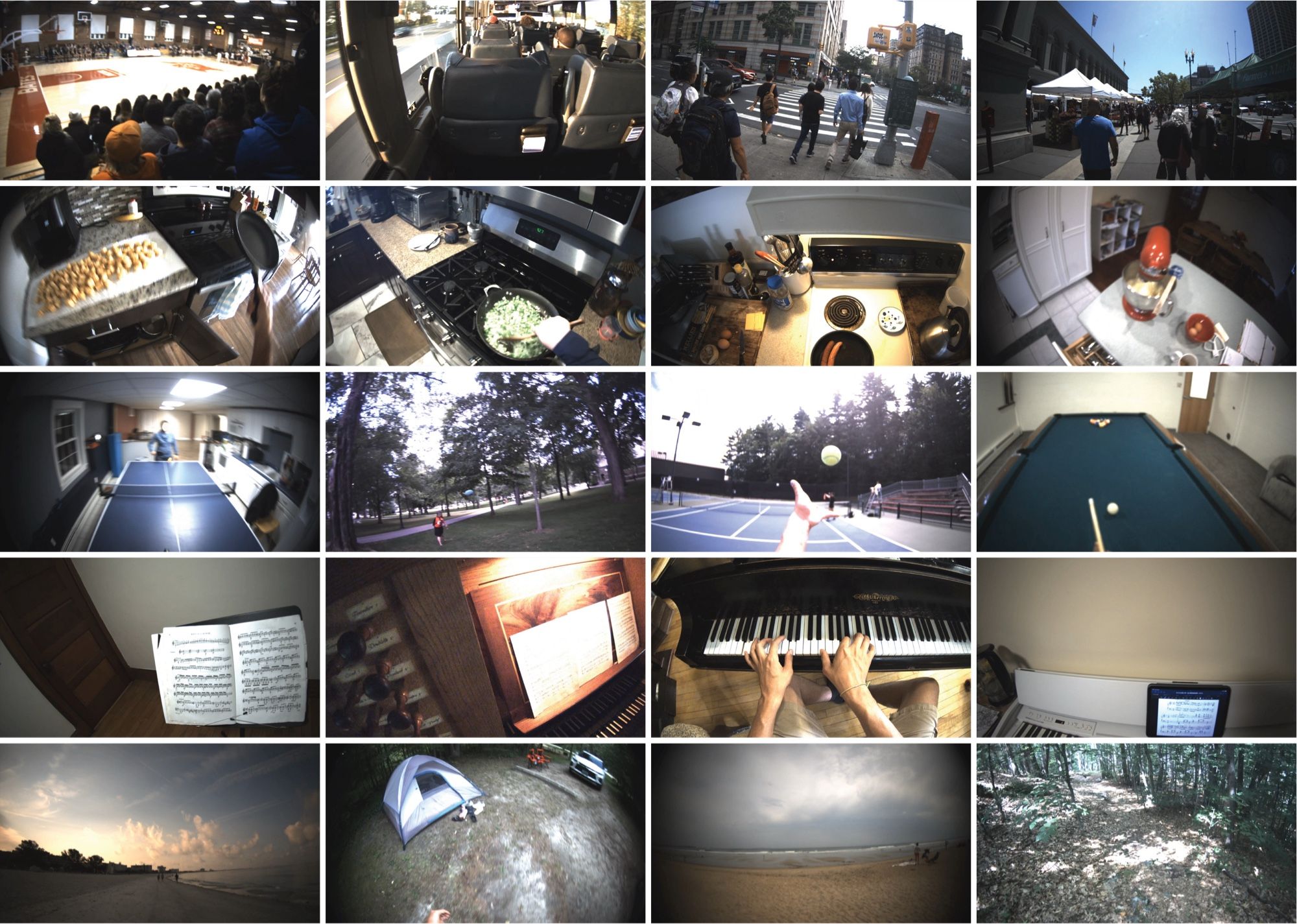

2/🔍 What's inside VEDB? 1️⃣ Egocentric video: a first-person perspective on everyday visual experiences. 🎥 2️⃣ Gaze data, showing where people look in real-world environments. 👁️ 3️⃣ Odometry data, capturing movement dynamics as individuals navigate spaces. 🛤️

We're thrilled to announce the release of the Visual Experience Dataset: over 200 hours of synchronized eye movement, odometry, and egocentric video data. This dataset will be a game-changer for perception and cognition research. arxiv.org/abs/2404.18934 1/

We introduce the Visual Experience Dataset (VEDB), a compilation of over 240 hours of egocentric video combined with gaze- and head-tracking data that offers an unprecedented view of the visual...

The APPLY lab is at VSS this year! We have an exciting set of presentations starting Sunday, ranging from continuous tracking to reading and eye movements. @ankosov.bsky.social @benwolfevision.bsky.social #VSS2024 #VSS