Commission discloses disagreements between general-purpose AI providers and other stakeholders

The Commission disclosed disagreements between general-purpose model providers and other stakeholders in the first Code of Practice plenary on general-purpose artificial intelligence (GPAI) (1/2)

Questions over positions in drafting GPAI guidelines, Council dubious on telcos consolidation

Welcome to Euractiv’s Tech Brief, your weekly update on all things digital in the EU.

So we've got the PAI, the GPAI, and now the PGIAI... everyone following? #AI www.state.gov/united-state...

Today in New York City, on the margins of the 79th Session of the United Nations General Assembly, Secretary of State Antony Blinken launched the Partnership for Global Inclusivity on AI and announced...

MEPs question appointment of leader for general-purpose AI code of practice

Three influential MEPs are questioning how the European Commission is appointing key positions in drafting guidelines for general-purpose AI (GPAI), on the same day that the EU executive is announcing who will (1/2)

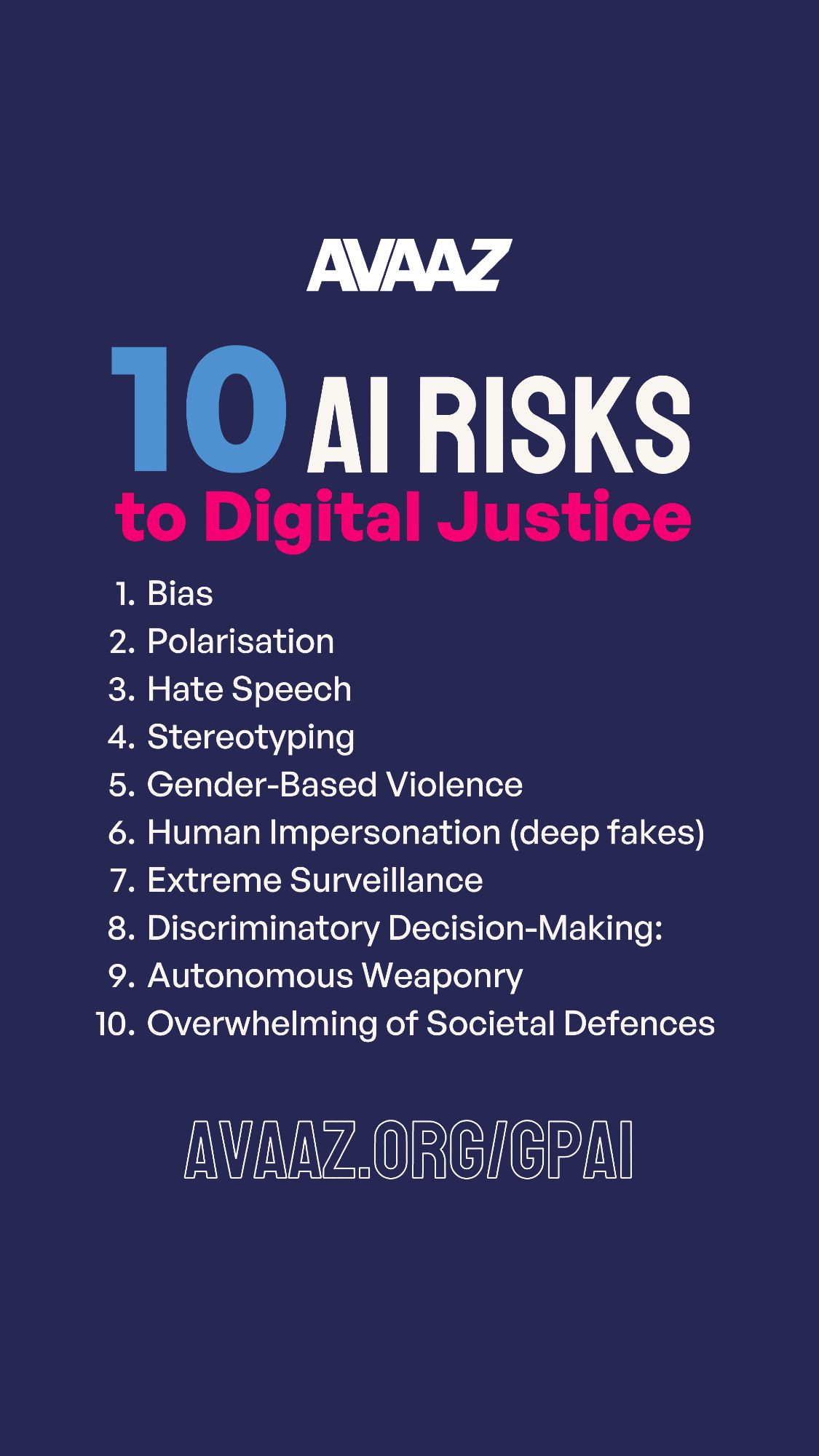

How many of these 10 AI risks did you know? Understand the threats and join us in advocating for a General Purpose Artificial Intelligence (GPAI) that upholds ethical standards. Together, let’s raise the voices and issues that are in danger of being ignored in AI debates: avaaz.org/gpai

Preparing a response to the @EU_Commission's public consultation on Open Source AI, and every time I try to read this question I feel like I'm having an aneurysm

The first primer focuses on what the EU AI Act calls "general-purpose AI" (GPAI) models—some of which may carry systemic risk. The authors explore options for the U.S. to consider regarding GPAI models in response to the EU's new regulations. www.rand.org/pubs/researc...

As AI applications proliferate worldwide, complex governance debates are taking place. Recent European legislation proposes regulations that will apply to U.S. companies seeking to operate powerful AI...

Interesting paper. As more AI regulations hinge on “risk,” we need to figure out how to quantify AI risk and thresholds for compliance. This paper explains compute thresholds and argues training compute is an imperfect proxy for risk: arxiv.org/pdf/2405.10799