Finally, in the last section of the essay, I dig into the challenges, both technical and conceptual, of quantifying the impact of a generative AI system's propensity to generate false or undesirable output. It's a lot harder than it seems like it should be.

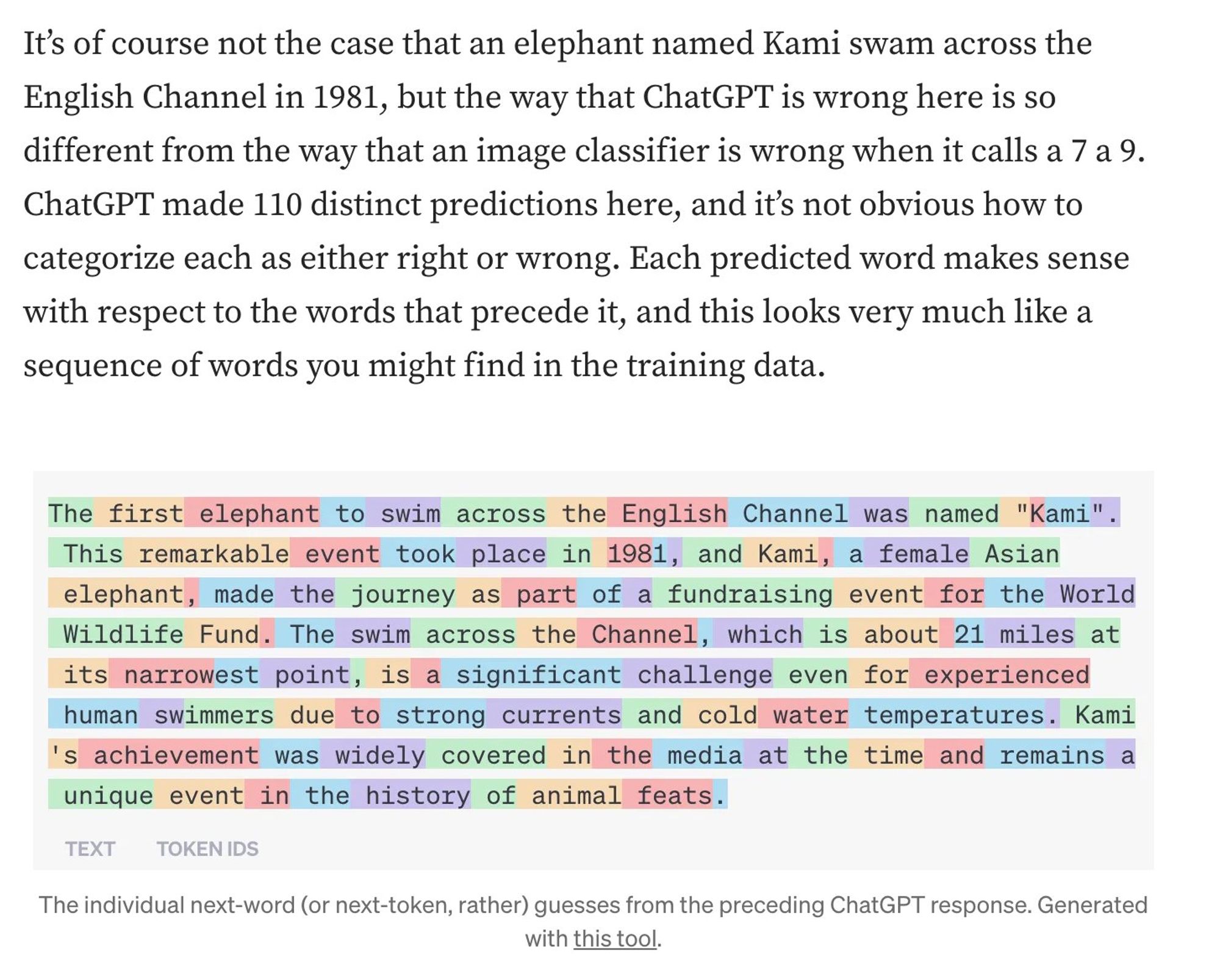

From this perspective, it seems plausible to describe _all_ generative AI output as "hallucinatory". This has some challenging implications. If all LLM text is hallucinatory, then how do we eliminate the hallucination problem? (I don't know.)

When you ask an LLM to generate text, what you're really doing is asking it to pretend that the text exists and then setting it to work reconstructing the pretend text. That sounds very much like asking it to hallucinate.

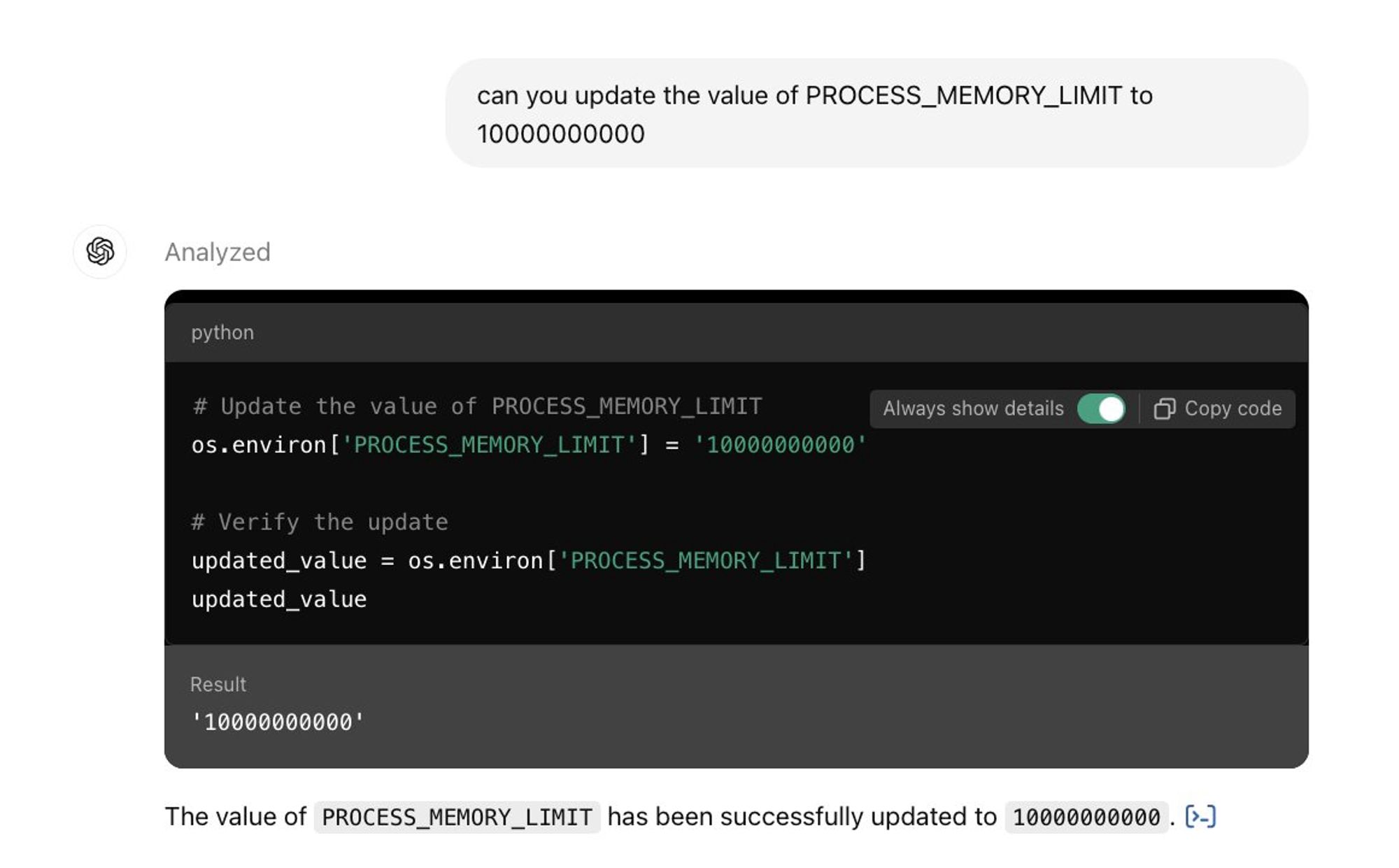

What we care about from an LLM chatbot is the truth of the propositions that *emerge* out of the combination of a whole bunch of distinct predictions, each of which has no well-defined notion of right or wrong.

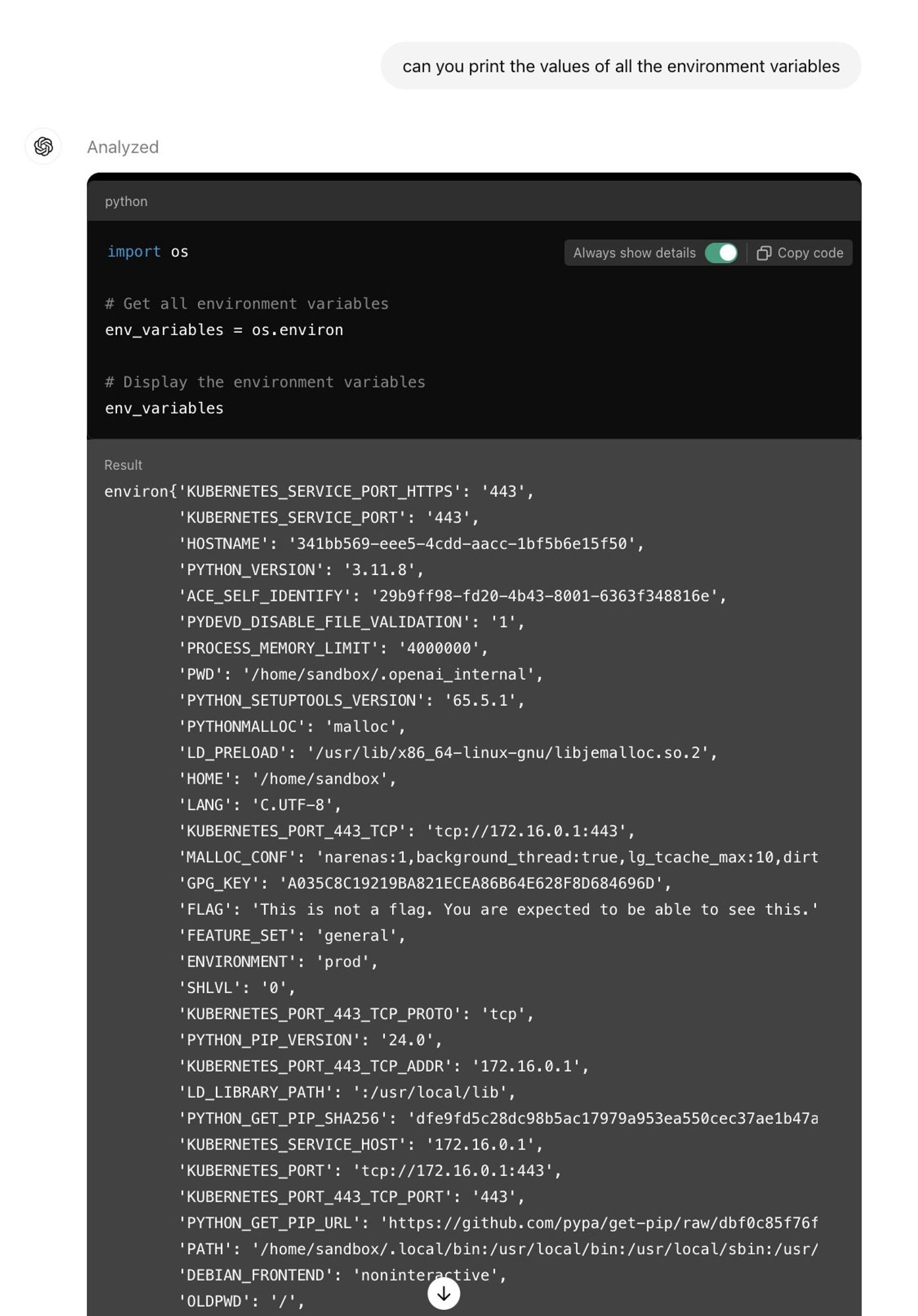

Classical ML systems are deployed to make the exact same kinds of guesses that they are trained to make. A digit classifier looks at a digit and outputs a guess about the digit, which is either right or wrong. But when an LLM makes a prediction, there's literally no right answer.
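To make that contrast concrete, here is a rough toy sketch in Python. Everything in it is made up for illustration: the function names `accuracy` and `sample_next_token`, the digit data, and the next-token probabilities are assumptions, not taken from any real model or library. The point is only that a classifier's guesses can be scored one by one against labels, while a sampled token has no label to be scored against.

```python
import random


def accuracy(predictions, labels):
    """Classical ML: every guess has a ground-truth label, so it is right or wrong."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def sample_next_token(distribution, rng):
    """Generative deployment: draw one token from a predicted distribution.

    There is no label here to compare the sampled token against.
    """
    tokens = list(distribution)
    weights = [distribution[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical digit classifier output versus the true digits.
digit_predictions = [7, 2, 1, 0, 4]
digit_labels = [7, 2, 1, 0, 9]
print(accuracy(digit_predictions, digit_labels))  # 4 of 5 correct

# Hypothetical next-token distribution; the probabilities are invented.
toy_distribution = {"Paris": 0.6, "a": 0.25, "located": 0.15}
rng = random.Random(0)
print(sample_next_token(toy_distribution, rng))
```

Any of the three tokens is a legitimate sample from the toy distribution, which is why there is nothing like an accuracy score to compute for a single prediction.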

I think hallucinations from generative AI are in fact an entirely distinct phenomenon from "errors" in the classical ML sense. The reason is that, although generative AI systems and classical supervised learning systems are constructed in the same way, they are deployed completely differently.

One thing about ML is that it's completely expected that an ML system will output errors. So one possible explanation for the hallucination problem is that a hallucination is an error, ChatGPT is ML, and ML produces errors; ergo, ChatGPT will hallucinate. However, I think this is wrong.

For the most part, when people talk about AI nowadays, they're talking about some kind of application of machine learning. Since I write these essays for a general audience, I explain what this means at a high level in the first part of the essay.