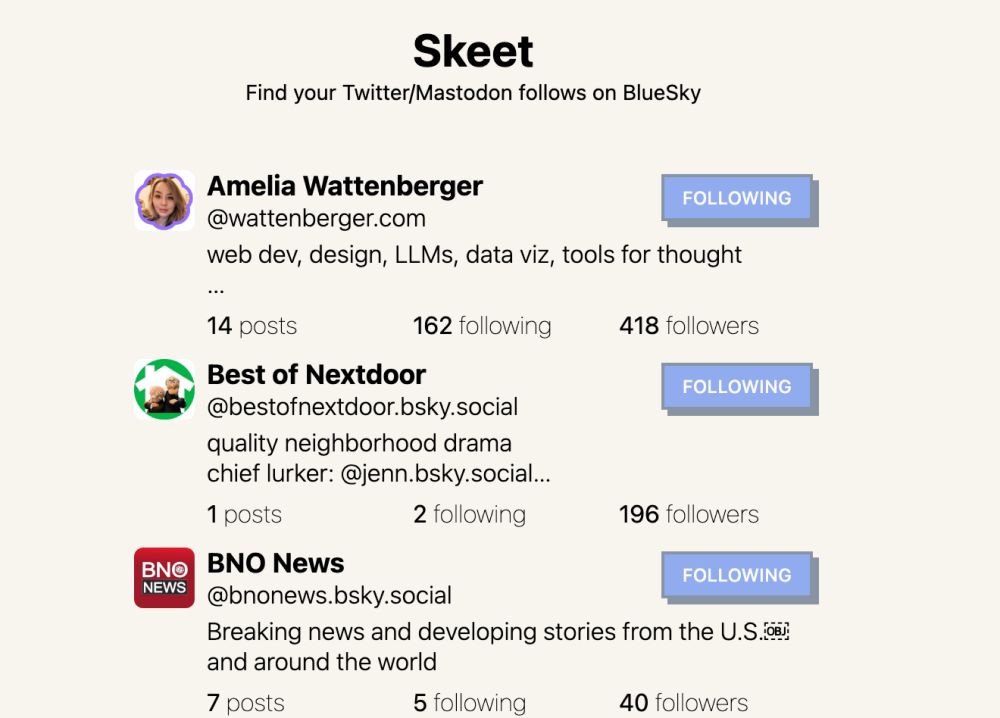

Just discovered this tool that helps you find your Twitter follows over here. Super helpful and should go a long way toward making the Bluesky experience feel more like the version of Twitter that we actually liked. https://skeet.labnotes.org

Find your Twitter/Mastodon follows on BlueSky

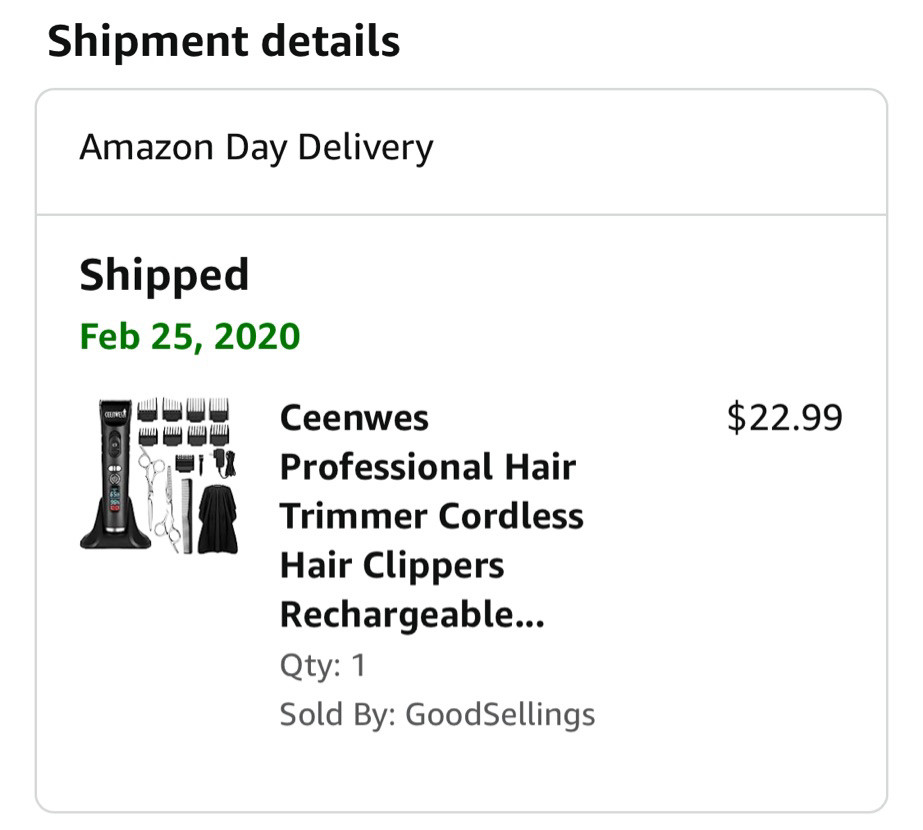

haha same here. after I landed in Seattle in Jan 2020, covid hit and everywhere was closed. I bought this and have been using it since (though I get a “tune-up” whenever I go back to SG 🤣)

6 weeks after this happened, I'm now working on this tech full time and driving the charge for my org 💪 Habit 1 (be proactive) in motion: Slowly but surely, you _can_ earn trust and expand your circle of influence.

What other key papers should we revisit?

Chinchilla: Smaller models trained on more data* are what you need. *10x more compute should be spent on a 3.2x larger model and 3.2x more tokens https://arxiv.org/abs/2203.15556
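A quick sanity check on the Chinchilla split, as a rough sketch: it assumes compute-optimal parameters and tokens both scale as roughly compute^0.5 (the 0.5 exponent is an approximation of the paper's fits, and the function name is made up for illustration). Under that assumption a 10x budget gives about sqrt(10) ≈ 3.16x to each.

```python
def chinchilla_allocation(compute_multiplier: float) -> tuple[float, float]:
    """Split a compute increase between model size and training tokens.

    Assumes (approximately) N_opt ∝ C^0.5 and D_opt ∝ C^0.5, so both
    multipliers grow with the square root of the compute multiplier.
    """
    a = b = 0.5  # assumed exponents for parameters (a) and tokens (b)
    return compute_multiplier ** a, compute_multiplier ** b


model_x, tokens_x = chinchilla_allocation(10.0)
print(f"{model_x:.2f}x params, {tokens_x:.2f}x tokens")  # 3.16x params, 3.16x tokens
```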

Scaling laws: Larger models trained on less data* are what you need. *10x more compute should be spent on a 5.5x larger model and 1.8x more tokens https://arxiv.org/abs/2001.08361
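Both rules still add up to the same 10x budget. Using the common approximation C ≈ 6ND (N = parameters, D = training tokens), the compute multiplier is just the product of the parameter and token multipliers. A minimal check, with a hypothetical helper name:

```python
def compute_multiplier(param_mult: float, token_mult: float) -> float:
    """Compute multiplier implied by scaling N and D, assuming C ≈ 6 * N * D.

    The constant 6 cancels out, so only the product of the multipliers matters.
    """
    return param_mult * token_mult


kaplan = compute_multiplier(5.5, 1.8)      # "Scaling laws" split
chinchilla = compute_multiplier(3.2, 3.2)  # Chinchilla split
print(f"Kaplan: {kaplan:.2f}x, Chinchilla: {chinchilla:.2f}x")  # Kaplan: 9.90x, Chinchilla: 10.24x
```

Same compute, very different bets: one puts most of it into parameters, the other splits it evenly.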

GPT3: Unsupervised pre-training + a few* examples is all you need. *Anywhere from 5 (Conversational QA) to 50 examples (Winogrande, PhysicalQA, TriviaQA) https://arxiv.org/abs/2005.14165

GPT2: Unsupervised pre-training is all you need?! https://openai.com/research/better-language-models

T5: Encoder-only or decoder-only is NOT all you need, though text-to-text is all you need. (Also, pre-training + finetuning 🚀) https://arxiv.org/abs/1910.10683

BERT: Encoder is all you need. Also, left-to-right language modeling is NOT all you need. (Also, pre-training + finetuning 📈) https://arxiv.org/abs/1810.04805