The program of Crypto 2024 is online, with an invited talk by Karthik Bhargavan on formal verification in crypto! #crypto2024 crypto.iacr.org/2024/program...

44th Annual International Cryptology Conference

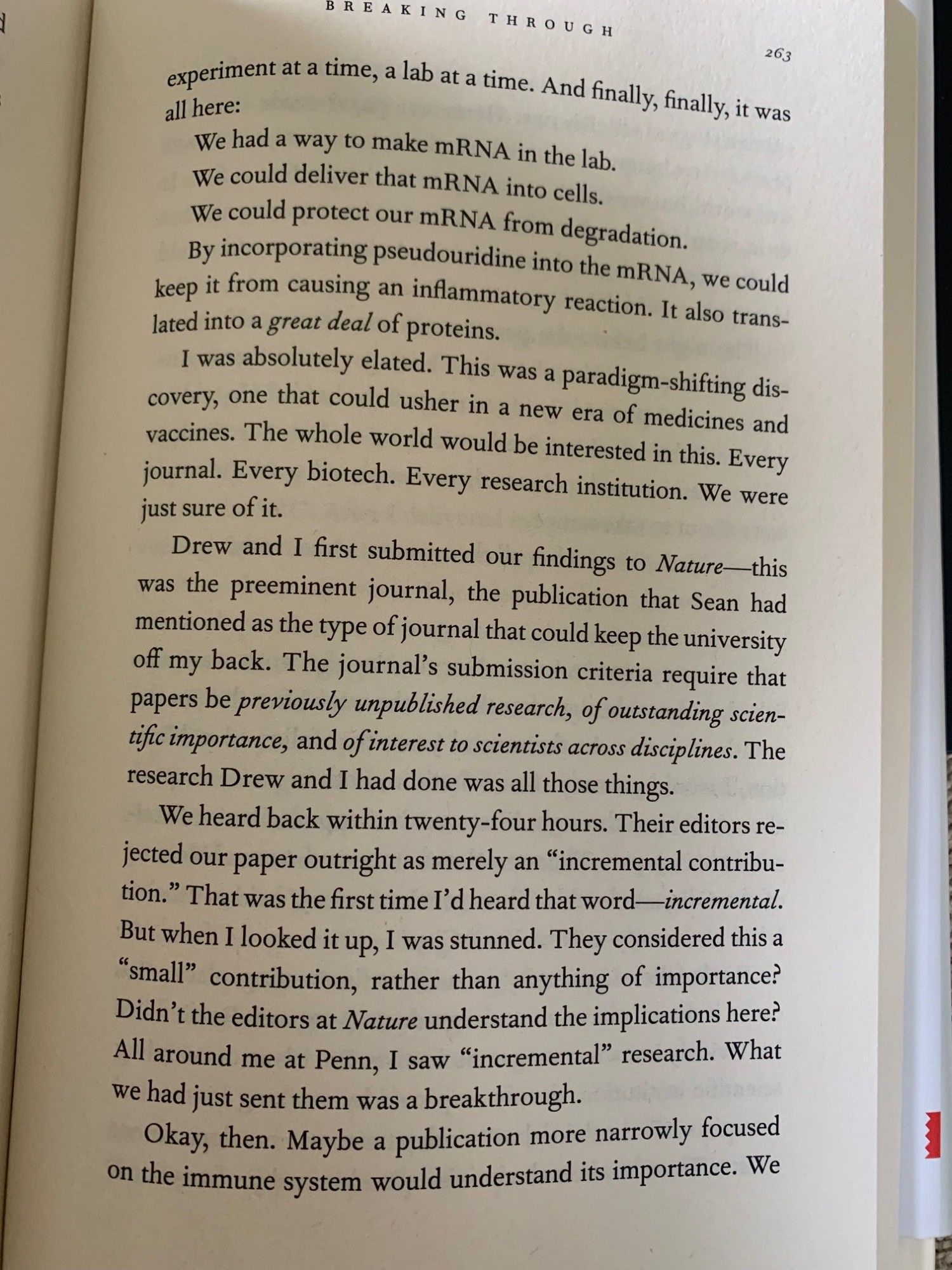

The word "incremental" should not be the kiss of death that it is. All research is incremental: increment by increment, you make progress! Katalin Karikó on how the discovery that brought us the mRNA Covid vaccine was received (from her memoir "Breaking Through," HIGHLY RECOMMEND):

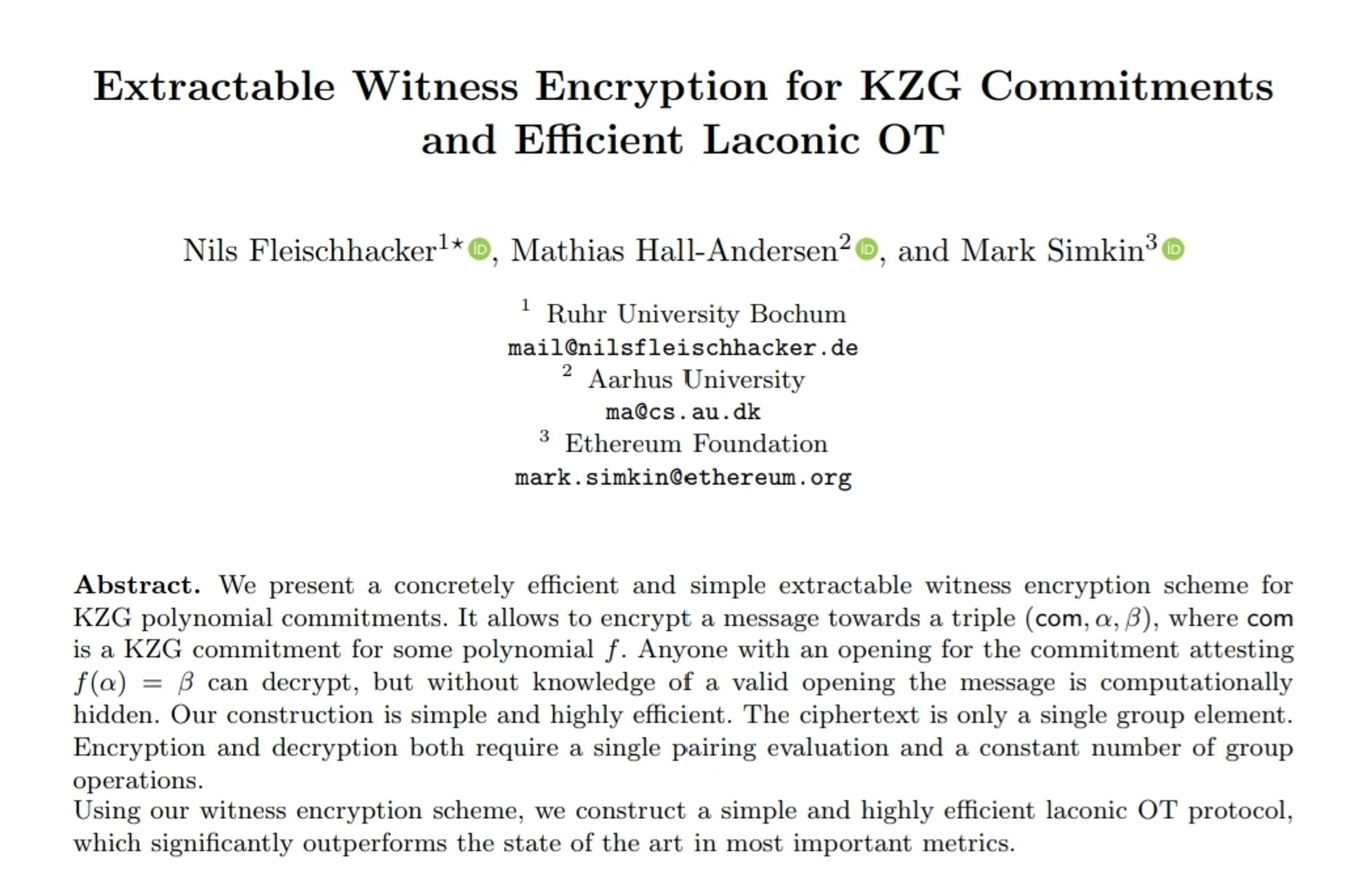

New paper! We construct an extractable witness encryption scheme for KZG commitments. This leads to a surprisingly efficient Laconic OT. ia.cr/2024/264
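For context, here is a sketch of the textbook KZG (Kate, Zaverucha, Goldberg) opening check. It uses the bracket notation $[a]_1 = a \cdot G_1$, $[a]_2 = a \cdot G_2$ and a pairing $e$, with $\tau$ the secret setup trapdoor and $d$ the maximum degree. This is the standard commitment scheme only, not the witness encryption construction from the paper, and the notation is this sketch's own choice:

$$
\begin{aligned}
\text{Setup: } & \mathsf{srs} = \big([1]_1, [\tau]_1, \dots, [\tau^d]_1;\ [1]_2, [\tau]_2\big) \\
\text{Commit: } & C = [f(\tau)]_1 \\
\text{Open at } z\text{: } & y = f(z), \quad q(X) = \frac{f(X) - y}{X - z}, \quad \pi = [q(\tau)]_1 \\
\text{Verify: } & e\big(C - [y]_1, [1]_2\big) \stackrel{?}{=} e\big(\pi, [\tau]_2 - [z]_2\big)
\end{aligned}
$$

Correctness follows from the polynomial identity $f(X) - y = q(X)(X - z)$ evaluated at $X = \tau$ inside the exponent.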

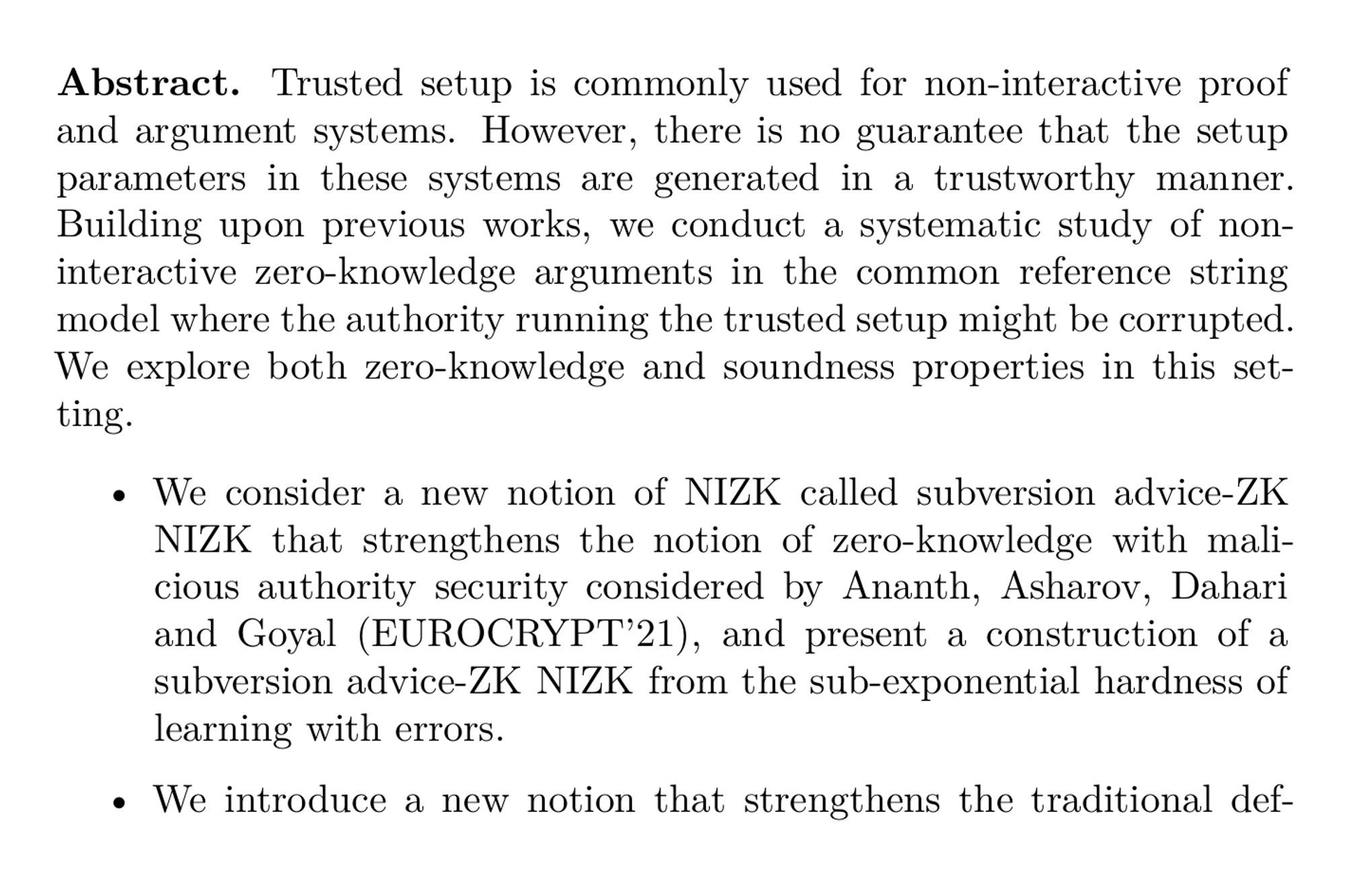

NIZKs with Maliciously Chosen CRS: Subversion Advice-ZK and Accountable Soundness (Prabhanjan Ananth, Gilad Asharov, Vipul Goyal, Hadar Kaner, Pratik Soni, Brent Waters) ia.cr/2024/207

And I forgot to say: it was accepted to Eurocrypt 2024. Joint work with former students Roberto Parisella and Janno Siim.

"A Secure Digital Society without Strong Encryption is Unthinkable" by Bart Preneel www.pymnts.com/cpi_posts/a-...

This concise article delves into the pervasive deployment of encryption across billions of devices, safeguarding our data both during communication over networks and during storage within our devices. Next i...

Zero-Knowledge Proofs of Training for Deep Neural Networks (Kasra Abbaszadeh, Christodoulos Pappas, Dimitrios Papadopoulos, Jonathan Katz) ia.cr/2024/162

Adaptively-Sound Succinct Arguments for NP from Indistinguishability Obfuscation (Brent Waters, David J. Wu) ia.cr/2024/165

![Abstract. Lipmaa, Parisella, and Siim [Eurocrypt, 2024] proved the extractability of the KZG polynomial commitment scheme under the falsifiable assumption ARSDH. They also showed that variants of real-world zk-SNARKs like Plonk can be made knowledge-sound in the random oracle model (ROM) under the ARSDH assumption. However, their approach did not consider various batching optimizations, resulting in their variant of Plonk having an argument approximately 3.5 times longer. Our contributions are: (1) We prove that several batch-opening protocols for KZG, used in modern zk-SNARKs, have computational special-soundness under the ARSDH assumption. (2) We prove that interactive Plonk has computational special-soundness under the ARSDH assumption and a new falsifiable assumption TriRSDH. We also prove that a minor modification of the interactive Plonk has computational special-soundness under only the ARSDH assumption. The Fiat-Shamir transform can be applied to obtain non-interactive versions, which are secure in the ROM under the same assumptions.](https://cdn.bsky.app/img/feed_fullsize/plain/did:plc:fwa55bujvdrwlwlwgqmmxmuf/bafkreihuwmovukxveqh7ol4ddvzmkxw6fyhrahg34fevdx5mj6eabqarxy@jpeg)
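The abstract's two ingredients, batch opening for KZG and the Fiat-Shamir transform, can be illustrated with a toy Python sketch. The following are assumptions of this sketch, not taken from the paper. Arithmetic is over the prime field modulo 2^61 - 1. A "commitment" is simply the field value f(τ) computed with a known trapdoor τ, so there is no hiding or binding. The verifier's pairing check is replaced by the same equation checked directly in the field. Batching folds several polynomials opened at one point z with powers of a challenge γ, derived by hashing the transcript with SHA-256. This is one common single-point batching trick and not necessarily one of the protocols analyzed in the paper. All helper names (`poly_eval`, `batch_open`, `batch_verify`, ...) are hypothetical.

```python
import hashlib
import secrets

# Toy prime field (assumption: real KZG uses the scalar field of a pairing-friendly curve).
P = 2**61 - 1


def poly_eval(coeffs, x):
    """Evaluate a polynomial (coefficients low-to-high degree) at x mod P via Horner's rule."""
    acc = 0
    for c in reversed(coeffs):
        acc = (acc * x + c) % P
    return acc


def poly_divide_linear(coeffs, z):
    """Synthetic division of f(X) by (X - z); returns (quotient, remainder) with remainder == f(z)."""
    quotient = [0] * (len(coeffs) - 1)
    carry = 0
    for i in range(len(coeffs) - 1, 0, -1):
        carry = (carry * z + coeffs[i]) % P
        quotient[i - 1] = carry
    remainder = (carry * z + coeffs[0]) % P
    return quotient, remainder


def fiat_shamir_challenge(*transcript):
    """Derive the verifier's challenge by hashing the transcript (length-prefixed items)."""
    h = hashlib.sha256()
    for item in transcript:
        data = str(item).encode()
        h.update(len(data).to_bytes(8, "big"))
        h.update(data)
    return int.from_bytes(h.digest(), "big") % P


def combine(items, gamma):
    """Random linear combination sum_i gamma^i * items[i] mod P."""
    return sum(pow(gamma, i, P) * v for i, v in enumerate(items)) % P


def batch_open(polys, z, tau):
    """Prover: open several polynomials at the same point z with a single quotient."""
    commitments = [poly_eval(f, tau) for f in polys]  # toy stand-in for [f_i(tau)]_1
    values = [poly_eval(f, z) for f in polys]
    gamma = fiat_shamir_challenge(*commitments, z, *values)
    # g(X) = sum_i gamma^i f_i(X), built coefficient by coefficient.
    width = max(len(f) for f in polys)
    g = [combine([f[k] if k < len(f) else 0 for f in polys], gamma) for k in range(width)]
    quotient, remainder = poly_divide_linear(g, z)
    if remainder != combine(values, gamma):  # sanity check: g(z) must equal the folded values
        raise ValueError("claimed values do not match the polynomials")
    proof = poly_eval(quotient, tau)  # toy stand-in for [q(tau)]_1
    return commitments, values, proof


def batch_verify(commitments, values, z, proof, tau):
    """Verifier: recompute gamma, fold commitments and values, check g(tau) - y == q(tau) * (tau - z).

    Real KZG does this with a pairing, e(C - [y]_1, [1]_2) == e(pi, [tau - z]_2),
    without ever learning tau; this toy version uses tau directly and is NOT secure.
    """
    gamma = fiat_shamir_challenge(*commitments, z, *values)
    folded_commitment = combine(commitments, gamma)
    folded_value = combine(values, gamma)
    return (folded_commitment - folded_value) % P == (proof * (tau - z)) % P


if __name__ == "__main__":
    tau = secrets.randbelow(P)  # trapdoor; a real setup would discard it
    polys = [[1, 2, 3], [5, 0, 7, 11]]
    commitments, values, proof = batch_open(polys, 42, tau)
    print(batch_verify(commitments, values, 42, proof, tau))  # True
```

Because γ is derived from the commitments, the opening point, and the claimed values, a prover who changes any claimed value also changes γ. A single quotient check therefore covers all the polynomials at once, and that check replaces one proof per polynomial.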