Clearly the brain is much more neuron-efficient than today's big ANNs. We need to understand how the brain packs the functionality of ANNs into a smaller footprint.

Neuromorphic's value is that processing (neurons) and memory (synapses) are colocated. Unlike with CPUs and GPUs, there is no separate memory to swap parameters in from as you move from layer to layer. It all basically has to fit on the chip. Multi-chip may work, but that gets into parallel computing, where communication dominates.

The big problem for neuromorphic with ANNs is that today's networks are so big it is unreasonable to imagine ever seeing them fit on a single chip. We have to figure out how to use the brain's complexity to make networks small enough to fit on a single chip, or a wafer, or whatever.

I think the bigger problem is that data is becoming a barrier to entry in high performing AI research.

How concretely do we know anything? Functionally, I think the effect is the same. Without the hippocampus, the brain appears capable of holding transient thoughts but does not encode them long term. This seems loosely analogous to an LLM session. But who knows what is actually happening.

Yeah, we just don't know. And indeed those studies show that motor learning does occur (slowly) sans hippocampus. The brain is dynamical and plastic at all timescales, everywhere (unlike ANNs). But to me those studies illustrate that only some of those changes stick unless made to.

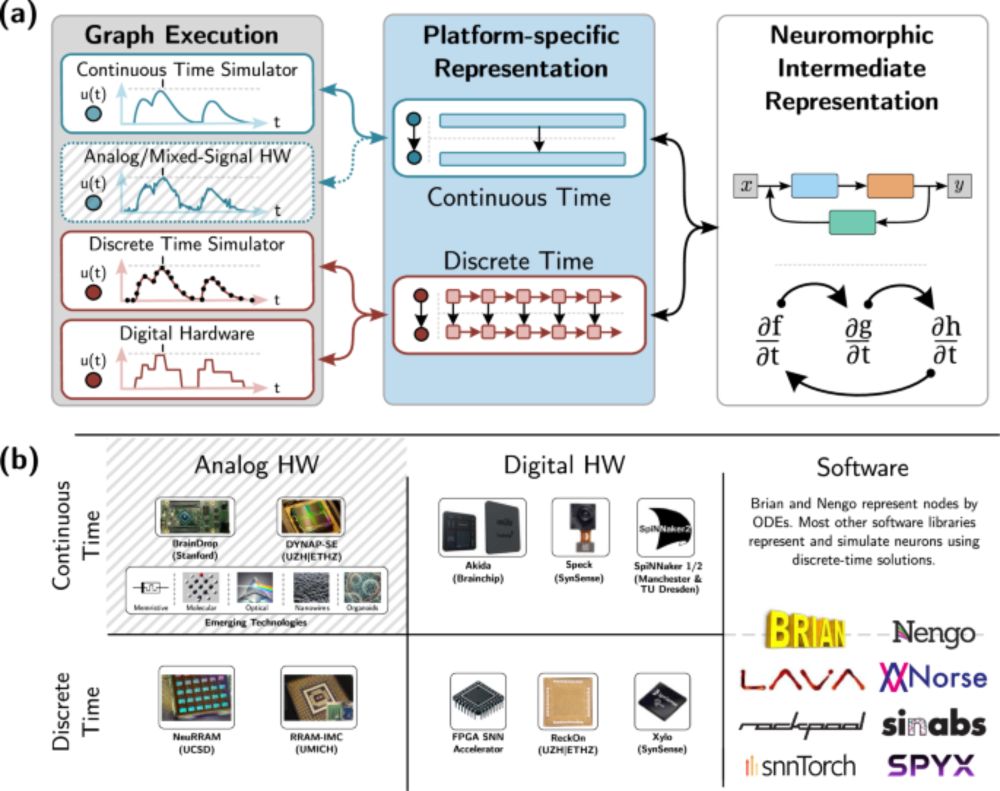

A really great paper just came out in Nature Communications about the Neuromorphic Intermediate Representation, or NIR. Most people here have never tried to program a neuromorphic chip. Well, it is hard, and in large part that is because every chip is different. www.nature.com/articles/s41...


Isn't the work on hippocampal lesion patients evidence that short-term reasoning (presumably context-dependent) can occur without long-term learning? That's obviously a higher level of description than mechanism, but it has always suggested to me that cortical processing can acquire information transiently, sans plasticity.

This is really hard for most neuromorphic and NeuroAI researchers because they aren't necessarily neuroscientists. They're trained in fields other than anatomy, molecular biology, etc. And it is harder to cross that boundary than most people realize. We have to work on that communication.

I very much like Blake's ideas here, because The Brain 🧠 is so big that it is underconstrained as an inspiration, so we need to zoom in to make any real inroads. The problem I've experienced in neuromorphic, and that I see in NeuroAI, is that these fields want to abstract away from the biophysics and anatomy very quickly.