For what it's worth, a great deal of why LLMs confabulate is that they don't have robust memories. It's not that they "predict the next token"; that's basically an IQ test (e.g. Raven's Progressive Matrices), and any cognitive process can be framed that way. It's that they basically have dementia.

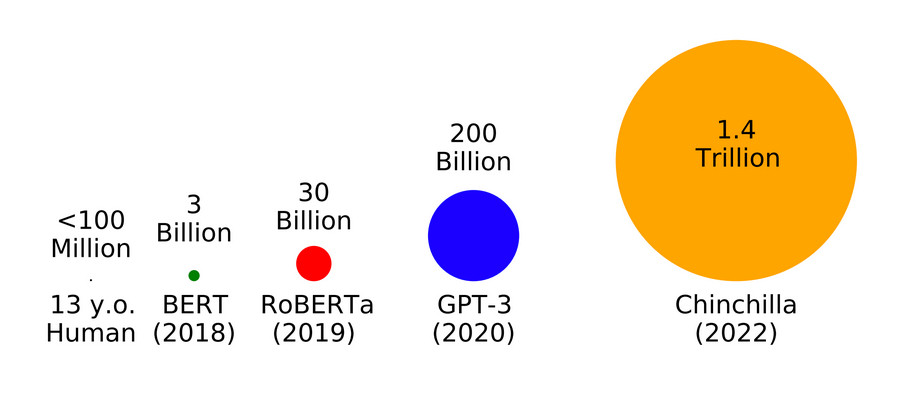

Interesting. Based on my conversations with BabyLM authors (babylm.github.io/index.html), my feeling was that LLMs (at least those trained on text) were something like 1000x more data-hungry than people. So far I haven't seen compelling models trained on human-sized datasets. Do you know of any LMs similar to this ViT?

That's kind of the same thing. Humans don't just "predict the next token", they have context. LLMs don't - they just crunch the numbers. It may be possible to layer different levels of analysis to try to simulate context, but that hasn't happened yet afaik.

One of my colleagues (Spyridon Samothrakis, not on here (yet?)) has a hypothesis that “catastrophic forgetting” is *the* problem across AI – in transfer in reinforcement learning, in LLMs, etc. But it’s a pretty speculative claim, so I'm not sure how you'd show that exactly.