
José Ramón Paño

@joserrapa.bsky.social

MD, ID, AMS, SALUD, HCUZ, IISA, AI, ES, EU, ESCMID, EIS, CMI-CMIComms, SEIMC

20 followers · 80 following · 9 posts

Reposted by José Ramón Paño

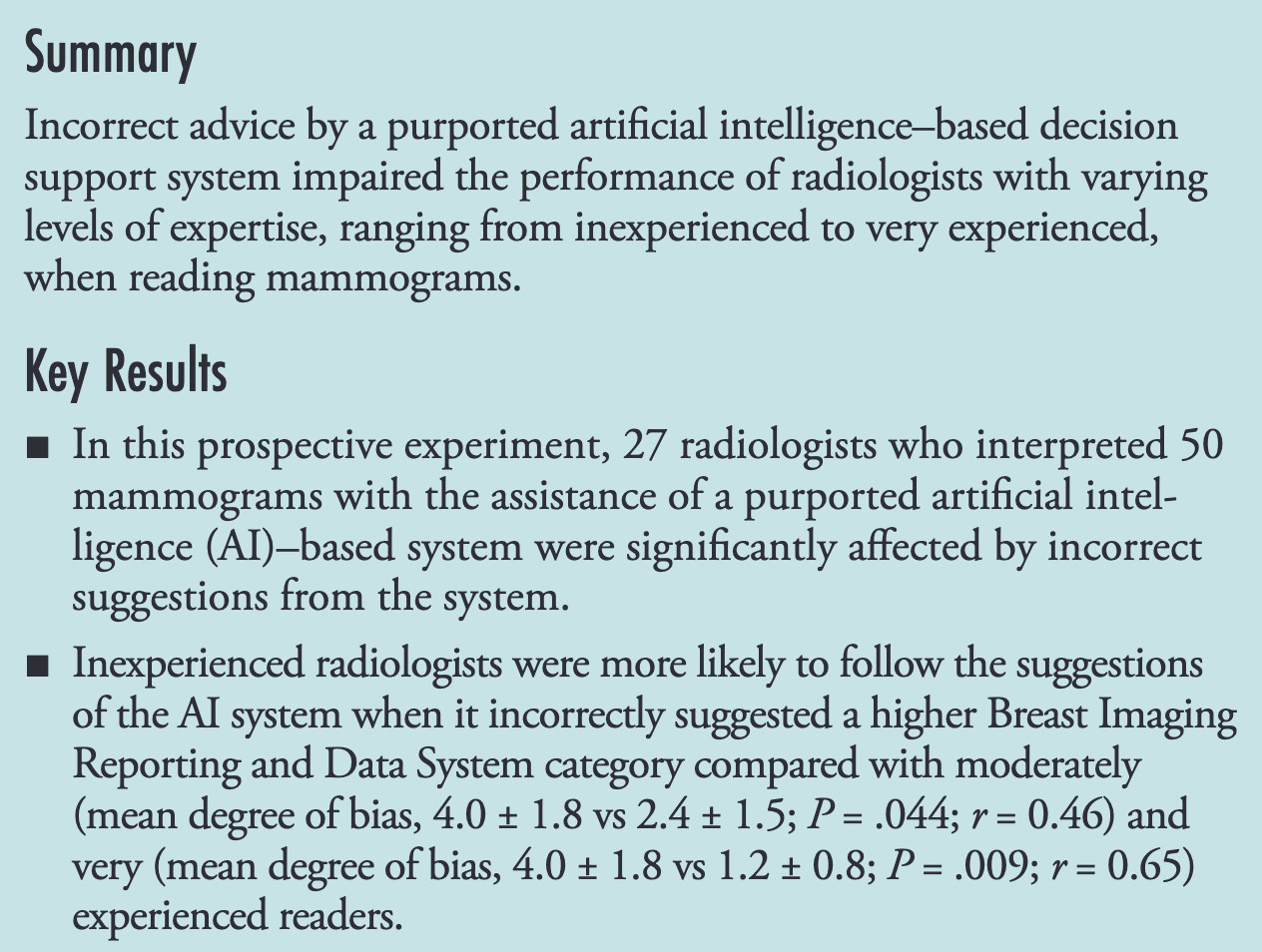

Having humans "review" the decisions doesn't help much when humans are known to be heavily swayed by wrong answers from an AI. Even highly trained radiologists reading mammograms, for example, are strongly biased by incorrect suggestions from an AI assistant.

![At least one human referee will then review each recommendation, said Christopher Sewell, director of the Nevada Department of Employment, Training, and Rehabilitation (DETR). If the referee agrees with the recommendation, they will sign and issue the decision. If they don’t agree, the referee will revise the document and DETR will investigate the discrepancy.

“There’s no AI [written decisions] that are going out without having human interaction and that human review,” Sewell said. “We can get decisions out quicker so that it actually helps the claimant.”](https://cdn.bsky.app/img/feed_fullsize/plain/did:plc:tbqqvyv6pjjww44glrmycaxl/bafkreic3ickkep265qybpqc2suf665gigvgqofpk4qobeqpzq7dmadgdvu@jpeg)
