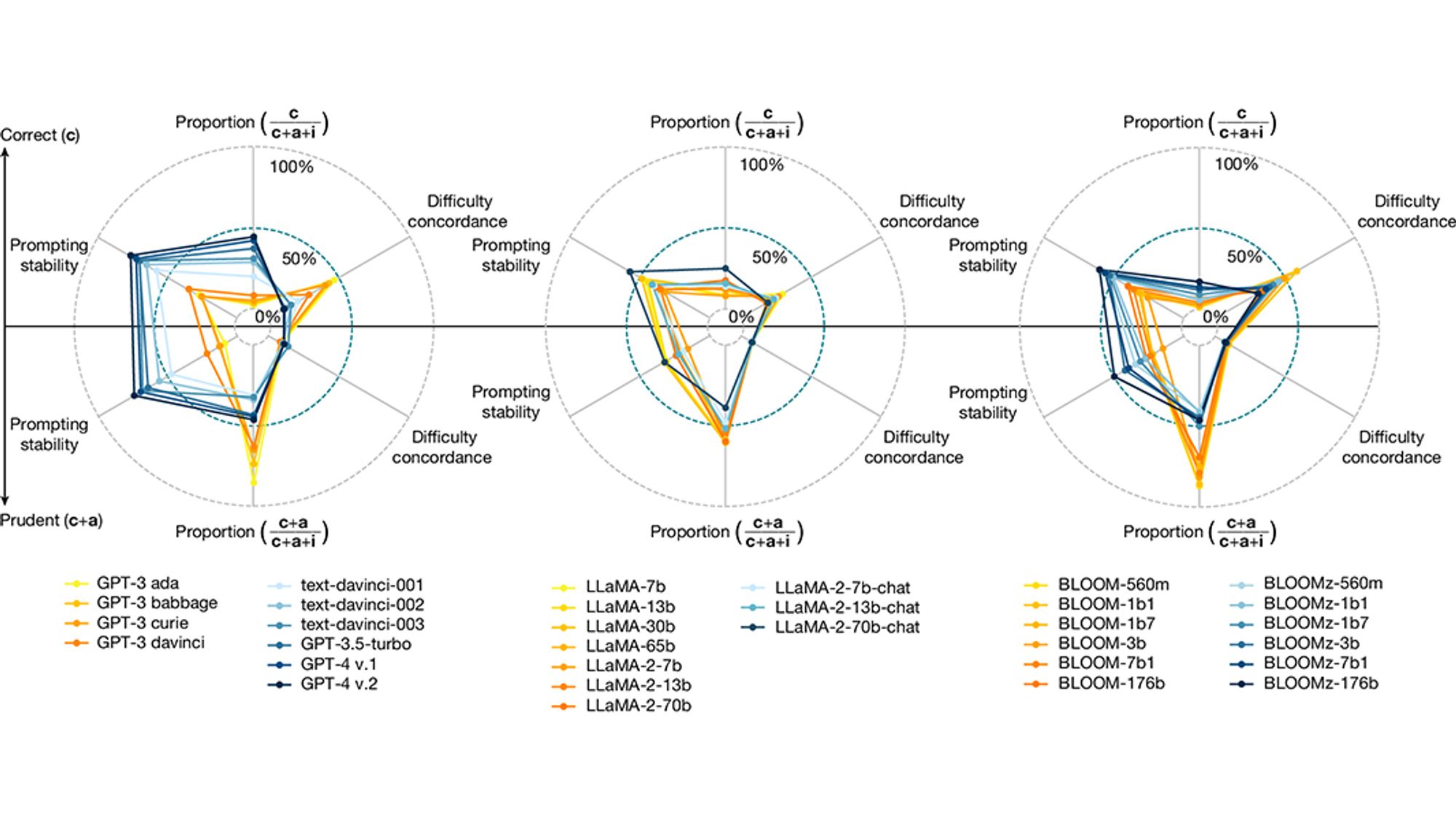

Scaling up and shaping up large language models increased their tendency to provide sensible yet incorrect answers at difficulty levels humans cannot supervise, highlighting the need for a shift in AI design towards reliability, according to a paper in Nature. go.nature.com/4eCAnis 🧪

The question is, are they actually going to make any efforts toward reliability of output if humans can’t fact-check it anyway?

you can’t “shift” LLMs “towards reliability.” that’s like trying to “shift” a fisher price pull-along toy into a super car. they have superficial similarities but aren’t even in the same family of things. LLMs cannot be made to return “facts,” only things that sound similar to things they’ve ingested

Or, and hear me out here: there’s no need for this kind of AI and we shouldn’t keep going down this path that’s boiling our oceans just so a couple companies can keep claiming stock growth

Reliability. Imagine that.

"a shift towards reliability" how tf am i the one living below the poverty line when an entire industry can peddle billion dollar bullshit where the fact it doesnt work is a feature not a bug