100%. It's also noteworthy that the decision not to release any details about the training data or model architecture allows them to avoid citing the work in that space, which has been disproportionately done by non-profit and academic researchers.

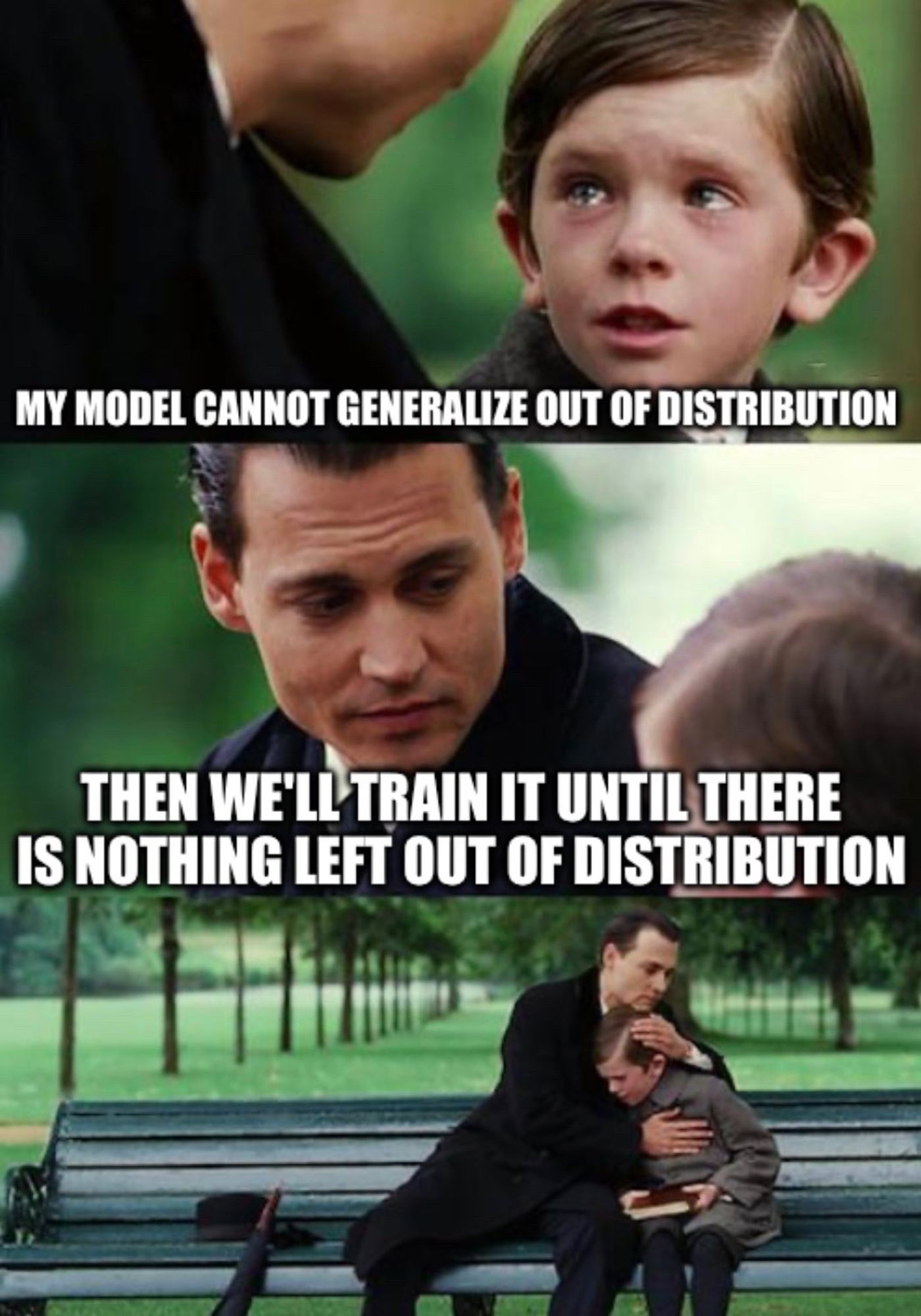

Apropos of "Pretraining Data Mixtures Enable Narrow Model Selection Capabilities in Transformer Models," this meme has been making the rounds in the EleutherAI Discord. arxiv.org/abs/2311.00871

It's really wild when people say stuff like "academia doesn't matter any more, only the big labs with the most money do." Recent inventions by non-profit researchers have brought massive improvements in large-scale models:

- ALiBi
- Scaled RoPE
- FlashAttention
- Parallel attention and MLP layers

This is your daily reminder that only three orgs have ever trained an LLM and released the model and full data: EleutherAI, BigScience (non-OS license), and Together Computer. Small orgs like these make science possible in the face of industry power.