
Tal Korem

@tkorem.bsky.social

Microbiome, network inference, metabolism and reproductive health. All views are mine.

239 followers · 159 following · 51 posts

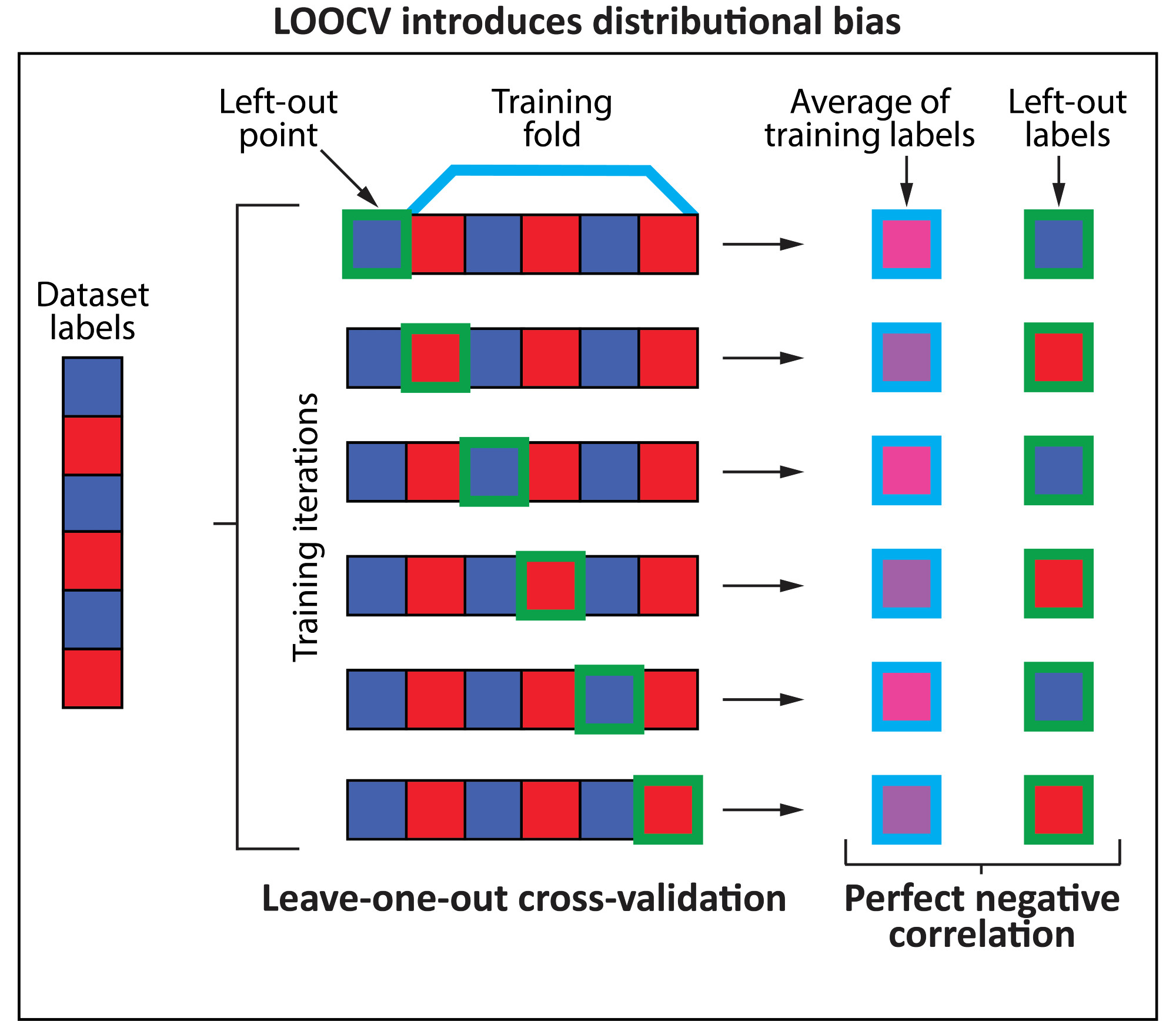

The issue is that every time one sample is held out as the test set in LOOCV, the average label of the training set shifts slightly. Across folds, this creates a perfect negative correlation between the training-set label mean and the held-out test label. We call this phenomenon distributional bias:
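A minimal sketch of why the correlation is exactly negative, assuming binary 0/1 labels and hypothetical helper names (`loocv_train_means`, `pearson`). With n samples whose labels sum to S, holding out sample i leaves a training mean of (S - y_i)/(n - 1), which is a decreasing linear function of the held-out label y_i, so the Pearson correlation across folds is -1 whenever both classes are present.

```python
def loocv_train_means(labels):
    """Training-set label mean for each LOOCV fold (fold i holds out sample i)."""
    n = len(labels)
    total = sum(labels)
    # Removing y_i from the sum shifts the mean down when y_i = 1, up when y_i = 0.
    return [(total - y) / (n - 1) for y in labels]


def pearson(xs, ys):
    """Plain Pearson correlation, computed without external libraries."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


labels = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1]  # hypothetical binary labels
train_means = loocv_train_means(labels)
print(pearson(train_means, labels))  # -1 up to floating-point rounding
```

The exact values depend only on the label counts, not on any features, which is what makes the leak usable by a model that never looks at the data.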

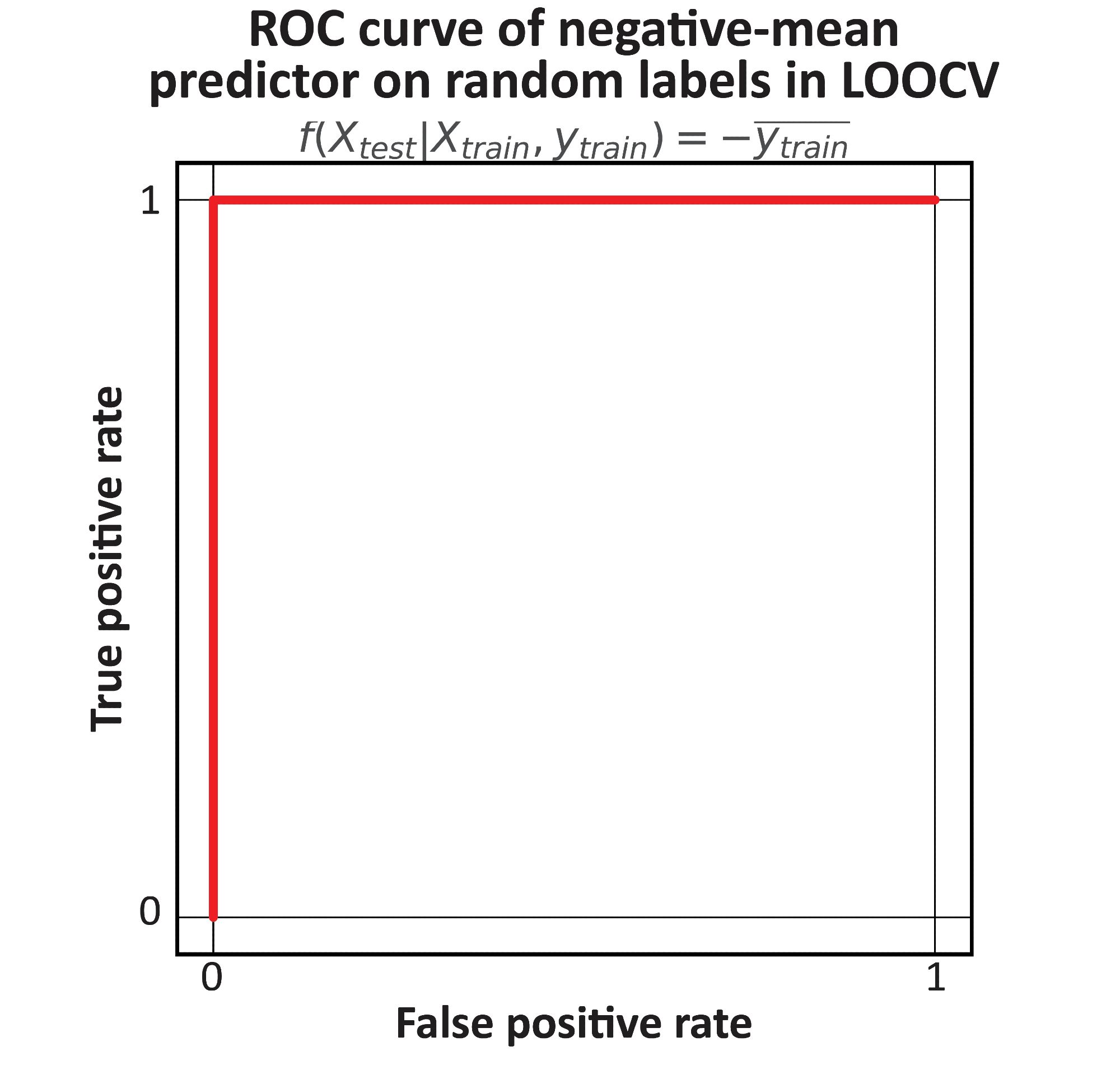

Distributional bias is a severe form of information leakage. It is so severe that we designed a dummy model that achieves perfect auROC/auPR in ANY binary classification task evaluated via LOOCV, even without features. How? It simply outputs the negative of the training-set label mean!
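A minimal sketch of such a dummy model, assuming binary 0/1 labels. The function names are hypothetical, and auROC and auPR are computed from scratch here (auPR as average precision, one common estimator) rather than with any particular library. Each fold's prediction is the negative training-set label mean, which is always higher for held-out positives than for held-out negatives.

```python
def dummy_loocv_predictions(labels):
    """LOOCV predictions of a featureless model: minus the training-label mean."""
    n = len(labels)
    total = sum(labels)
    preds = []
    for y_test in labels:
        train_mean = (total - y_test) / (n - 1)  # fold "trained" without y_test
        preds.append(-train_mean)  # the only thing the dummy model outputs
    return preds


def auroc(labels, scores):
    """Probability that a random positive outscores a random negative (ties = 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Rank by descending score and average the precision at each positive."""
    order = sorted(range(len(labels)), key=lambda i: scores[i], reverse=True)
    hits, precisions = 0, []
    for rank, i in enumerate(order, start=1):
        if labels[i] == 1:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions)


labels = [0, 1, 1, 0, 1, 0, 0, 1, 1, 1]  # hypothetical binary labels
preds = dummy_loocv_predictions(labels)
print(auroc(labels, preds), average_precision(labels, preds))  # 1.0 1.0
```

Any label vector containing both classes gives the same result: positives all receive -(S - 1)/(n - 1) and negatives all receive -S/(n - 1), so the two classes are perfectly separated without using a single feature.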
