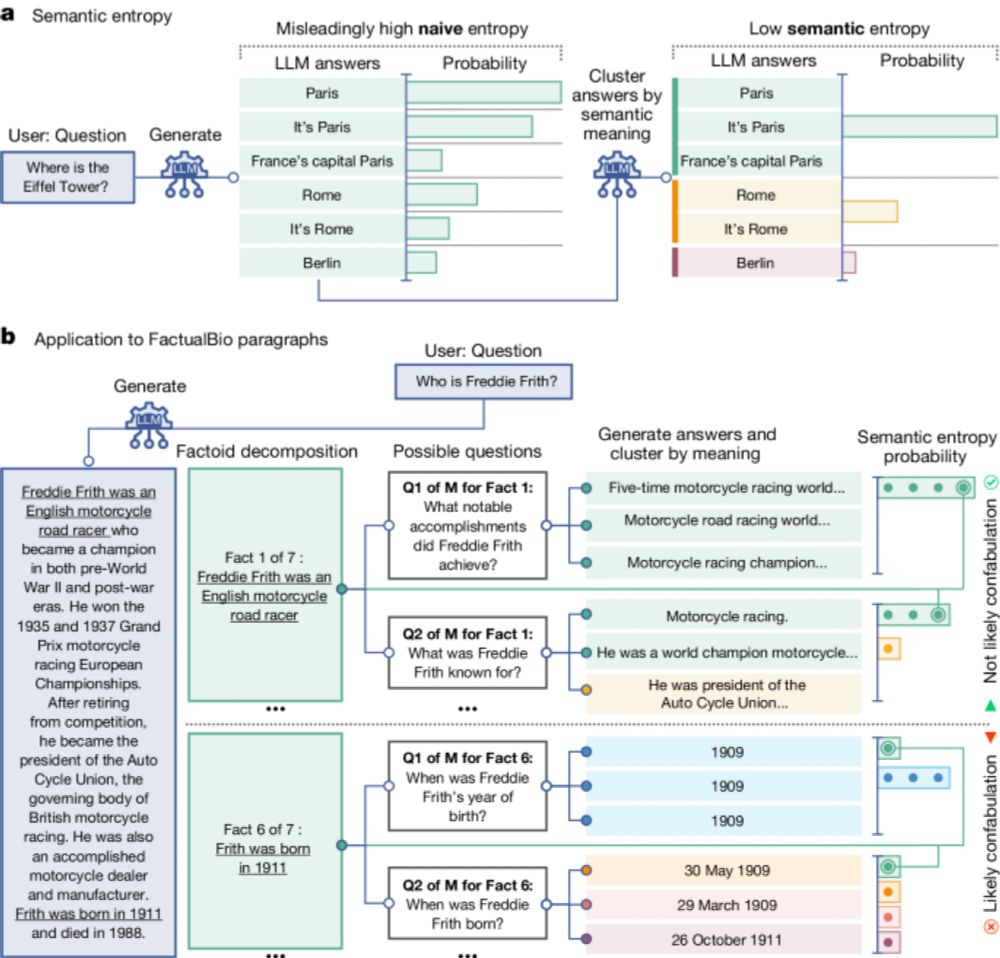

A cool new paper on detecting "hallucinations" (or "bullshitting") in LLMs. The idea is simple: cluster the potential answers by entailment, and the entropy over those clusters then tells us how "unsure" the model is, which is linked to confabulation. www.nature.com/articles/s41...

Detecting hallucinations in large language models using semantic entropy - Nature

Hallucinations (confabulations) in large language model systems can be tackled by measuring uncertainty about the meanings of generated responses rather than the text itself to improve question-a...
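
To make the idea concrete, here's a rough sketch of the cluster-then-entropy step, not the paper's actual code. Assumptions: two answers land in the same cluster when each entails the other, and the entropy is taken over cluster frequencies among the sampled answers. `toy_entails` is a hypothetical stand-in that only compares normalized strings; in practice you'd swap in a real entailment (NLI) model.

```python
import math
from typing import Callable, List


def cluster_by_entailment(
    answers: List[str], entails: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily group answers that entail each other in both directions."""
    clusters: List[List[str]] = []
    for answer in answers:
        for cluster in clusters:
            representative = cluster[0]
            # Assumption: mutual entailment means "same meaning".
            if entails(representative, answer) and entails(answer, representative):
                cluster.append(answer)
                break
        else:
            # No existing cluster matched, so this answer starts a new one.
            clusters.append([answer])
    return clusters


def semantic_entropy(
    answers: List[str], entails: Callable[[str, str], bool]
) -> float:
    """Entropy (in nats) of the distribution of answers over meaning clusters."""
    clusters = cluster_by_entailment(answers, entails)
    total = len(answers)
    return sum(
        -(len(c) / total) * math.log(len(c) / total) for c in clusters
    )


def toy_entails(a: str, b: str) -> bool:
    """Hypothetical placeholder: treats answers as equivalent if they match
    after lowercasing and dropping punctuation. Not a real NLI model."""
    def normalize(s: str) -> str:
        return "".join(ch for ch in s.lower() if ch.isalnum())
    return normalize(a) == normalize(b)


# Sampled answers that all mean the same thing vs. answers that disagree.
confident = ["Paris", "paris.", "Paris!"]
unsure = ["Paris", "Lyon", "Marseille"]

print(semantic_entropy(confident, toy_entails))  # one cluster, zero entropy
print(semantic_entropy(unsure, toy_entails))     # three clusters, log(3)
```

Low entropy means the sampled answers agree in meaning even if the wording differs; high entropy means the model keeps giving answers that mean different things, which is the signal the post describes.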