Andrew Heiss 🍂🎃

@andrew.heiss.phd

Assistant professor at Georgia State University, formerly at BYU. 6 kids. Study NGOs, human rights, #PublicPolicy, #Nonprofits, #Dataviz, #CausalInference.

#rstats forever.

#LDSforHarris

andrewheiss.com

3.3k followers · 2k following · 1.9k posts

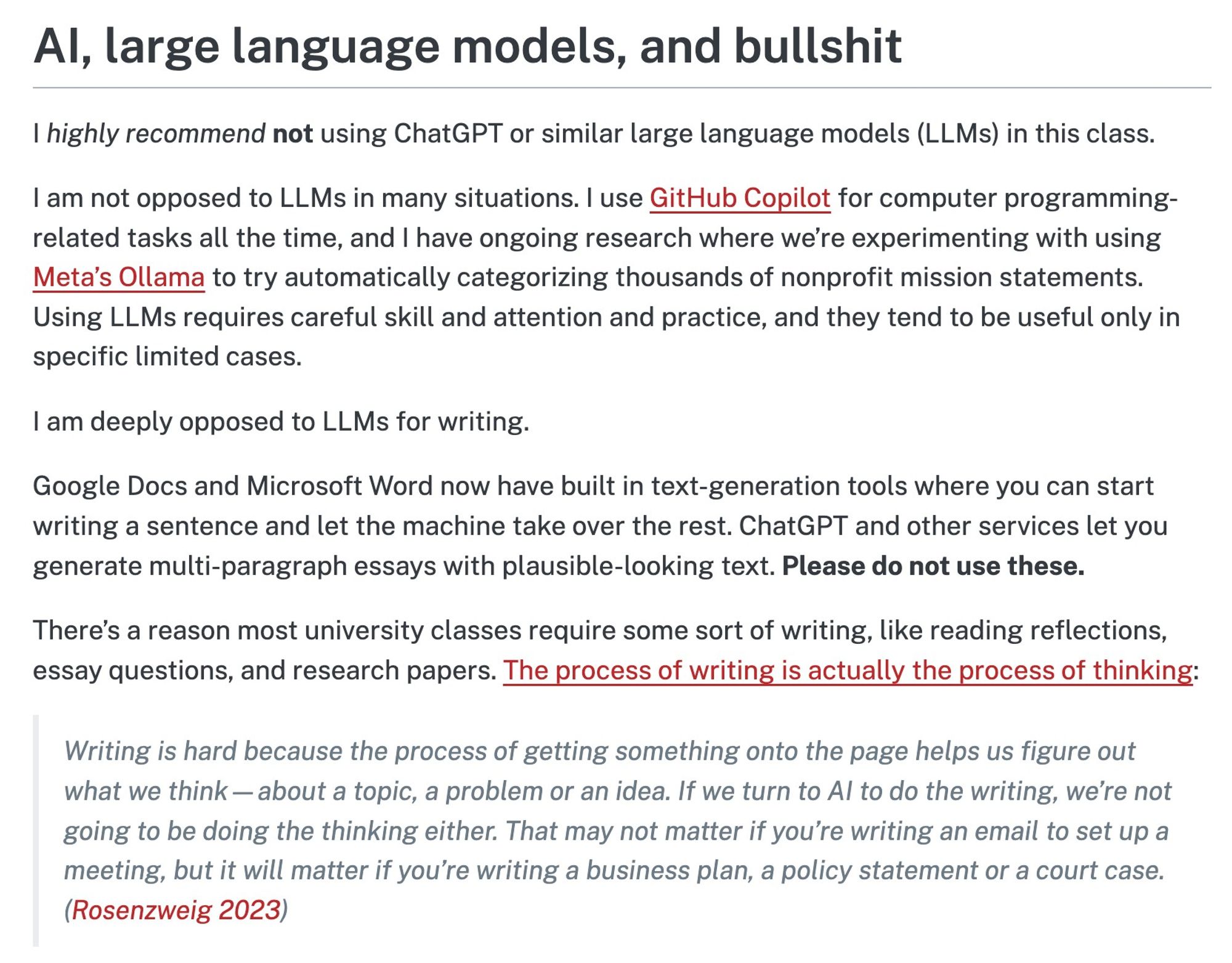

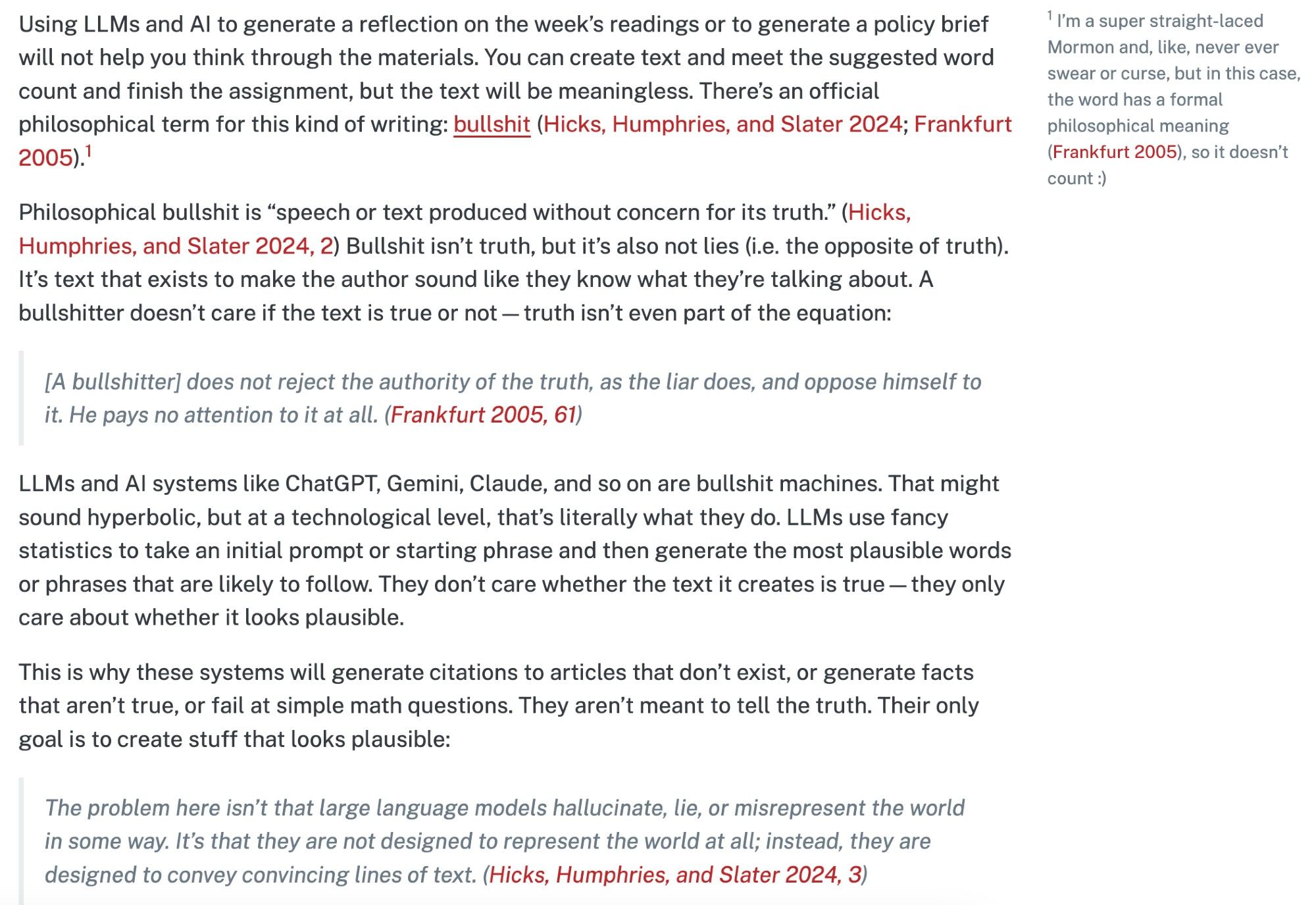

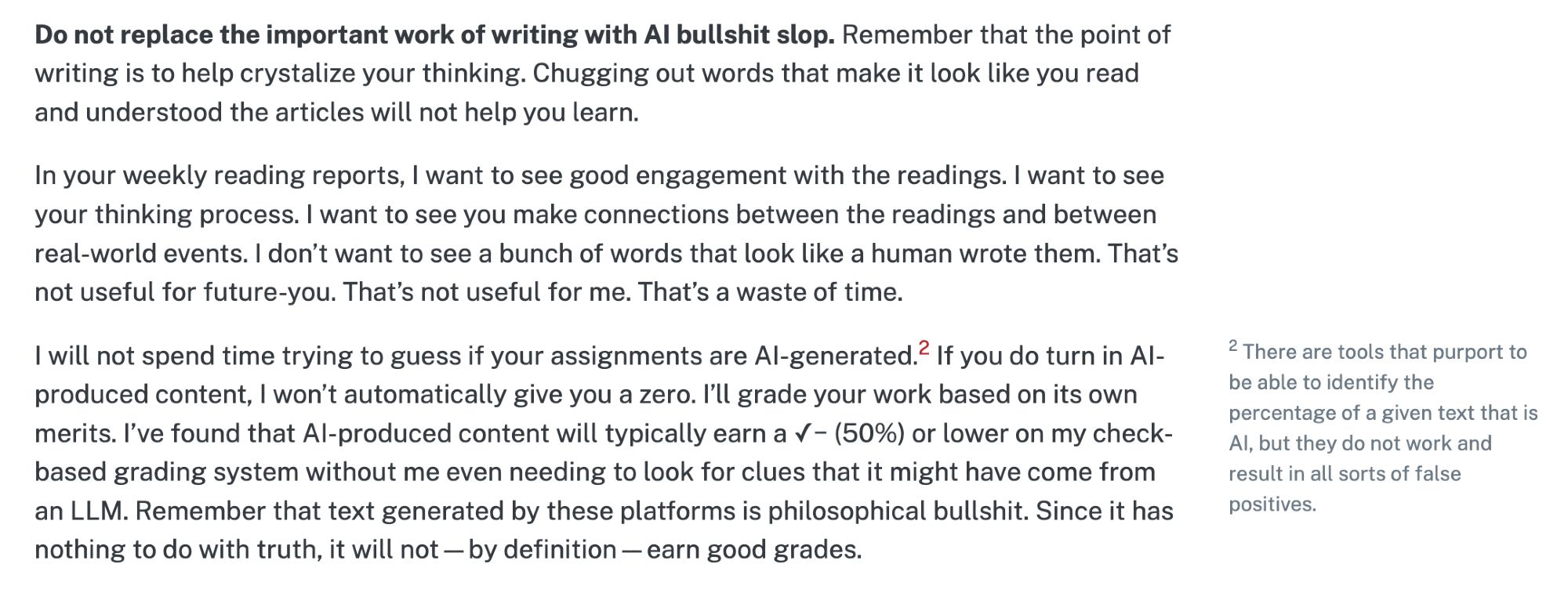

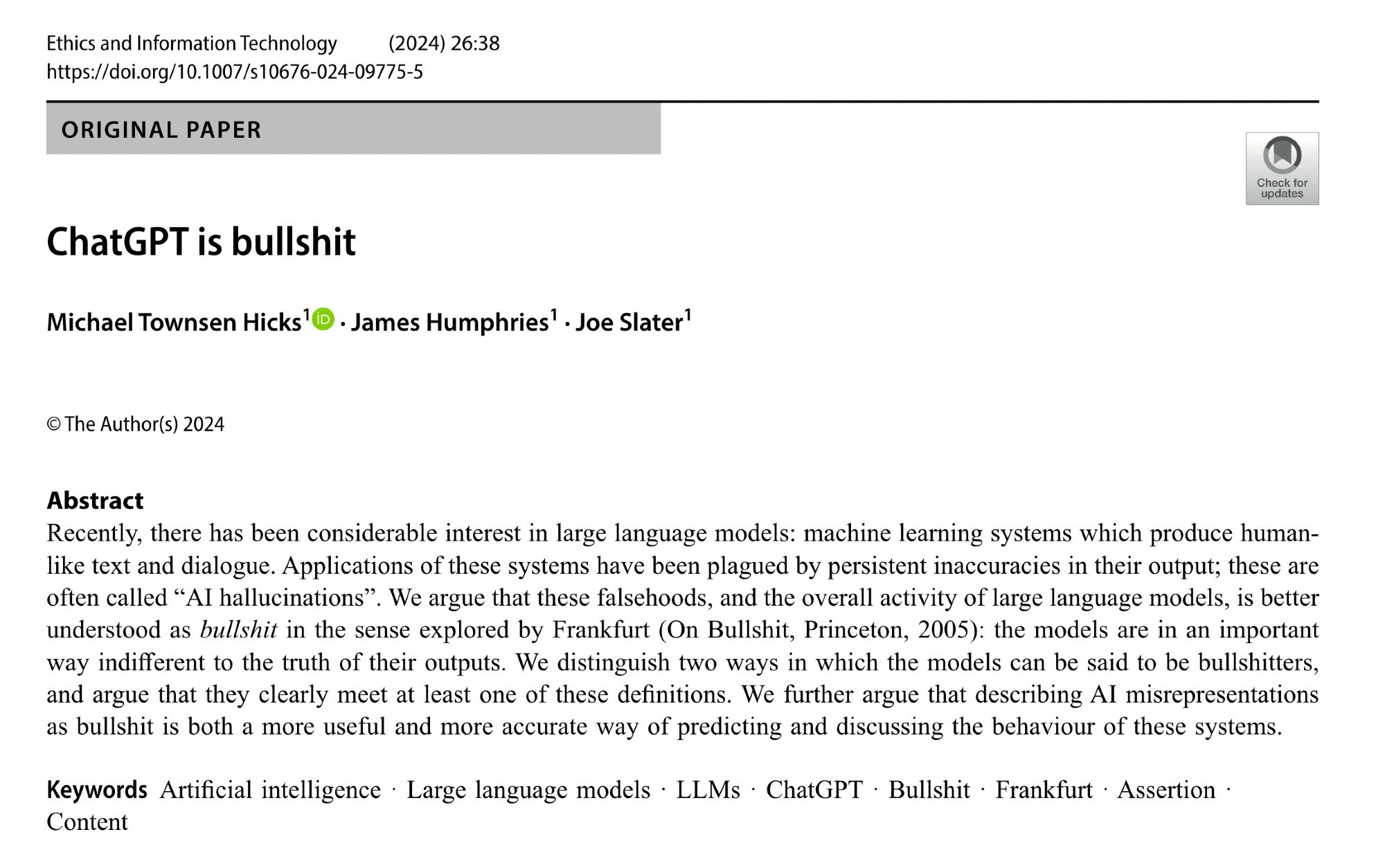

Finally created an official policy for AI/LLMs in class (compaf24.classes.andrewheiss.com/syllabus.htm...doi.org/10.1007/s106...

Greatly appreciate your sharing this!

Dear god FINALLY an honest A“I” policy

Beautiful. Hope you don’t mind if I riff off this to replace my overly milquetoast current version. And thanks for the article link!

This is SO SO good. Thank you for sharing it.

this is great, thanks for sharing

This is the way!!

I would be so happy to see a professor give this kind of genuine, solid advice at the start of any course
