Further reminder that John Roberts is by far the most damaging figure in American politics.

and in the process, only big tech and AI vendors profit

'In some cases, “institutional plagiarism checkers seem to be playing both sides of the market”, with some large edtech firms providing both a “premium AI…to rephrase AI generated or normally plagiarised work so that it can avoid detection” and a plagiarism detector.' The squalor that is Edtech.

Essay mills pivoting to offering low-cost services to avoid plagiarism checks

Continuing to try to spread this information. "To opt out, log into your LinkedIn account, tap or click on your headshot, and open the settings. Then, select 'Data privacy,' and turn off the option under 'Data for generative AI improvement'."

The professional network now by default grants itself permission to use anything you post to train its artificial intelligence

As part of a test run for Australia's corporate regulator, AI was used to summarise submissions made by the public. The trial found that AI performed worse in every metric compared with humans. Assessors suggested AI would make more work for people, not less. www.crikey.com.au/20...

A test of AI for Australia's corporate regulator found that the technology might actually make more work for people, not less.

"Researchers at the University of Pennsylvania found that Turkish high school students who had access to ChatGPT while doing practice math problems did worse on a math test compared with students who didn’t have access to ChatGPT." hechingerreport.org/kids-chatgpt...

Researchers compare math progress of almost 1,000 high school students

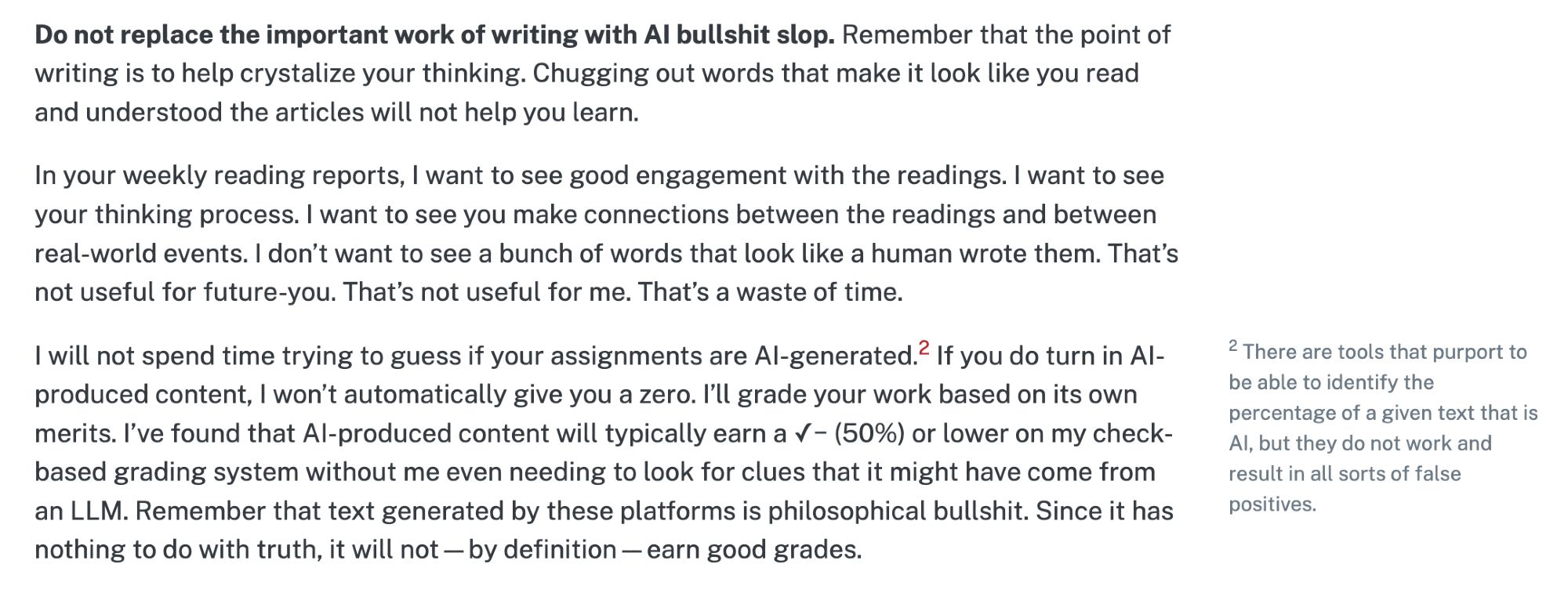

Finally created an official policy for AI/LLMs in class (compaf24.classes.andrewheiss.com/syllabus.htm... doi.org/10.1007/s106...

“People generally substantially overestimate what the technology is capable of today. In our experience, even basic summarization tasks often yield illegible and nonsensical results.” must read www.404media.co/goldman-sach...

One of the world's largest investment banks wonders if generative AI will be worth the huge investment and hype: "will this large spend ever pay off?"