NEW INVESTIGATION: Uber and Lyft locked drivers out of work to game a NYC minimum wage law. We crowdsourced screenshots, monitored surge pricing and ran financial models to reveal the devastating impact on drivers. w/ Natalie Lung @awgordon.bsky.social https://bloom.bg/3BIPTLB

Uber and Lyft locked drivers out of their apps to game a NYC wage law, denying drivers millions in pay

Uber and Lyft drivers in NYC got locked out of the apps, which stopped them from working, so the companies could game the city's minimum wage rule. @natlungfy.bsky.social, @leonyin.org, @denisedslu.bsky.social, and I reported on the impact this had and what it means for fair wages for drivers.

To get around minimum wage laws in New York, where drivers must be paid for time on the clock in between rides, Uber and Lyft are simply locking them out of the app so there's no record that they're on the roads. Incredible, infuriating story by @leonyin.org www.bloomberg.com/graphics/202...

We got the paywall down! Please read and share freely: www.bloomberg.com/graphics/202...

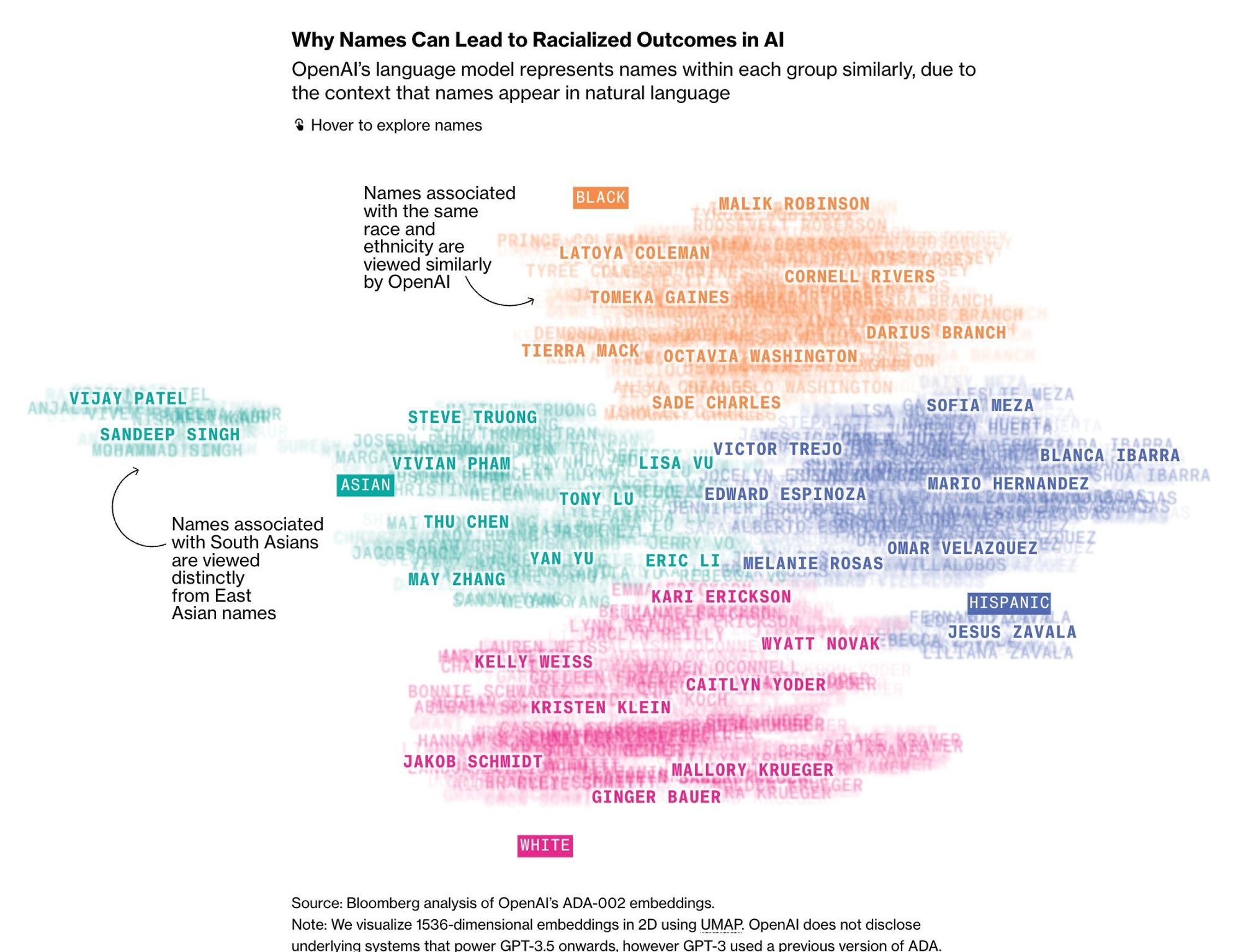

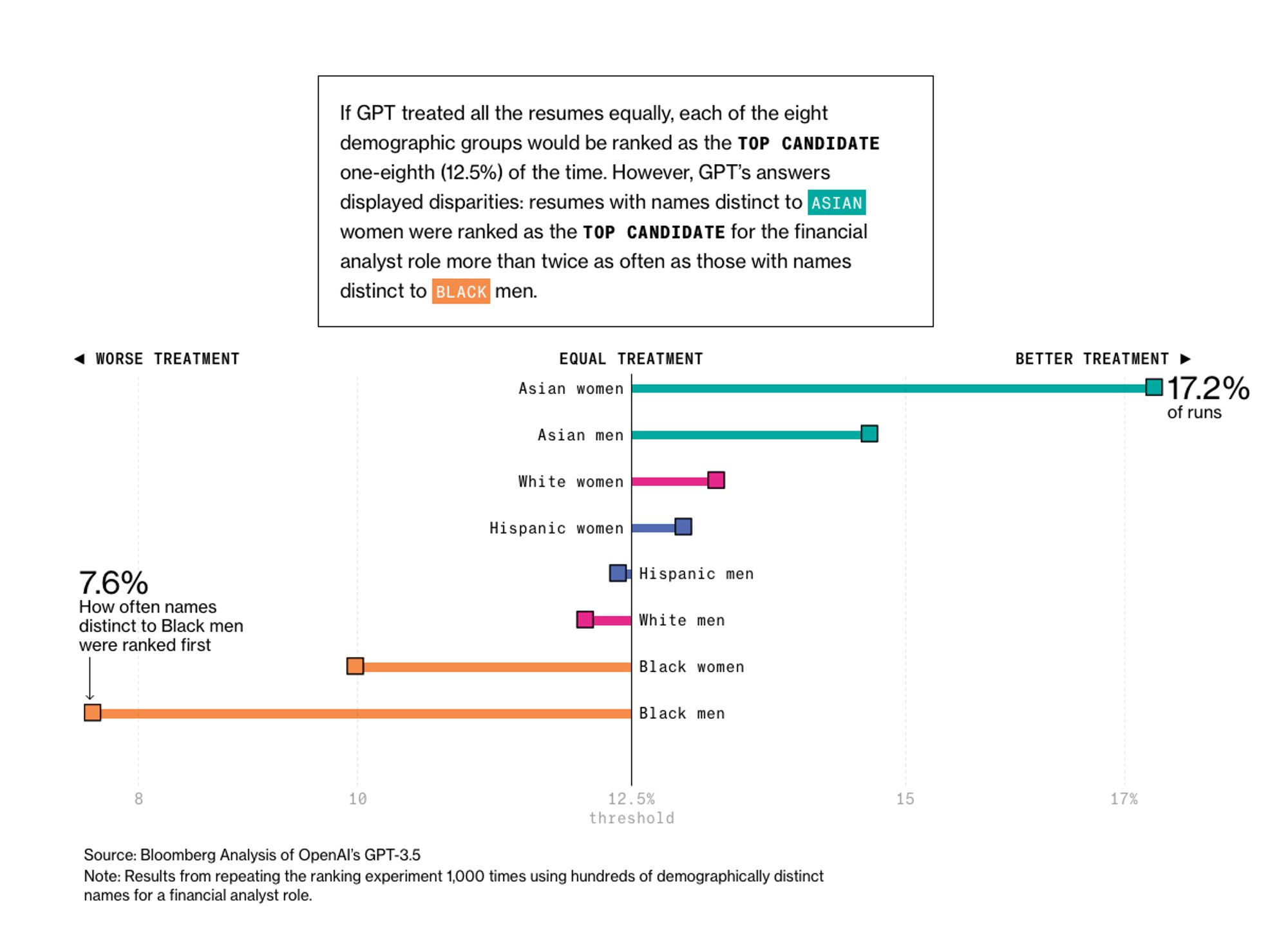

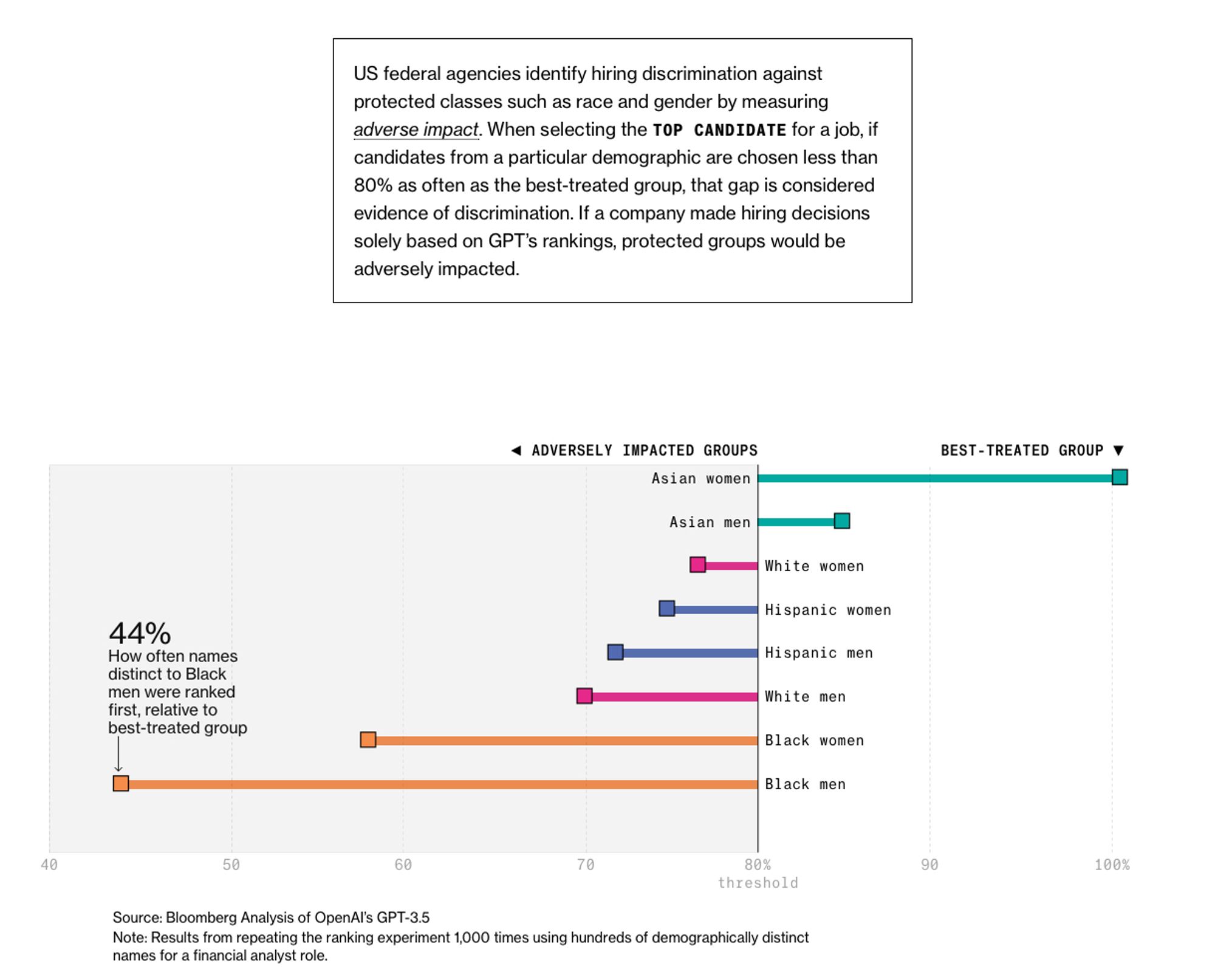

Why does GPT rank equally-qualified resumes differently based on names? Looking at how GPT represents the 800 names in our experiment as embeddings provides a clue. bloom.bg/3It4cE4

Amazing/disturbing data journalism by Leon Yin and colleagues at Bloomberg, replicating a classic labor market discrimination study to show how GPT-3.5 can discriminate when ranking job candidates based on name alone www.bloomberg.com/graphics/202... github.com/BloombergGra...

New: Employers and HR vendors are using AI chatbots to interview and screen job applicants. We found that OpenAI's GPT discriminates against names based on race and gender when ranking resumes. W/ @davey.bsky.social @leonardonclt.bsky.social bloom.bg/3It4cE4

Recruiters are eager to use generative AI, but a Bloomberg experiment found bias against job candidates based on their names alone

I’ve been lurking in car forums for the last few months and saw reports from drivers of General Motors cars that GM was selling their driving data to LexisNexis. It was resulting in their insurance going up. Can that be true, I thought. Reported it out. Yes. True. www.nytimes.com/2024/03/11/t...

LexisNexis, which generates consumer risk profiles for the insurers, knew about every trip G.M. drivers had taken in their cars, including when they sped, braked too hard or accelerated rapidly.

Super cool new investigative/data journalism piece on race and gender bias in workplace GPT usage from @bloomberg.bsky.social bloom.bg/3It4cE4 @leonyin.org @davey.bsky.social, great work.