Also on Thursday, Moufan Li will present: [Talk+Poster B162] Modeling Multiplicity of Strategies in Free Recall with Neural Networks 2024.ccneuro.org/poster/?id=528 @marcelomattar.bsky.social @kristorpjensen.bsky.social @QiongZhang! (2/2)

Looking forward to #CCN2024 2024.ccneuro.org/poster/?id=122 @ptoncompmemlab.bsky.social @DaphnaShohamy for supporting this project! (1/2)

Please retweet! I am excited to share that I am looking for motivated postdocs who want to come and work with me at NYU (please apply here: apply.interfolio.com/145544) (1/3)

I would like to thank Tyler Giallanza, Declan Campbell, Cody Dong, Jon Cohen, and anonymous reviewers for their insightful and constructive feedback!

This project was accepted as a talk at CogSci 2024 and CEMS 2024! I want to thank my collaborator, Ali Hummos, and my advisor, Ken Norman (@ptoncompmemlab.bsky.social). Feel free to reach out with any comments/questions/suggestions!

… Interestingly, EM errors (retrieving a similar task instead of the correct one) also helped the model learn the ring, as they cause representations for similar tasks to be used in overlapping sets of scenarios, making them more similar.

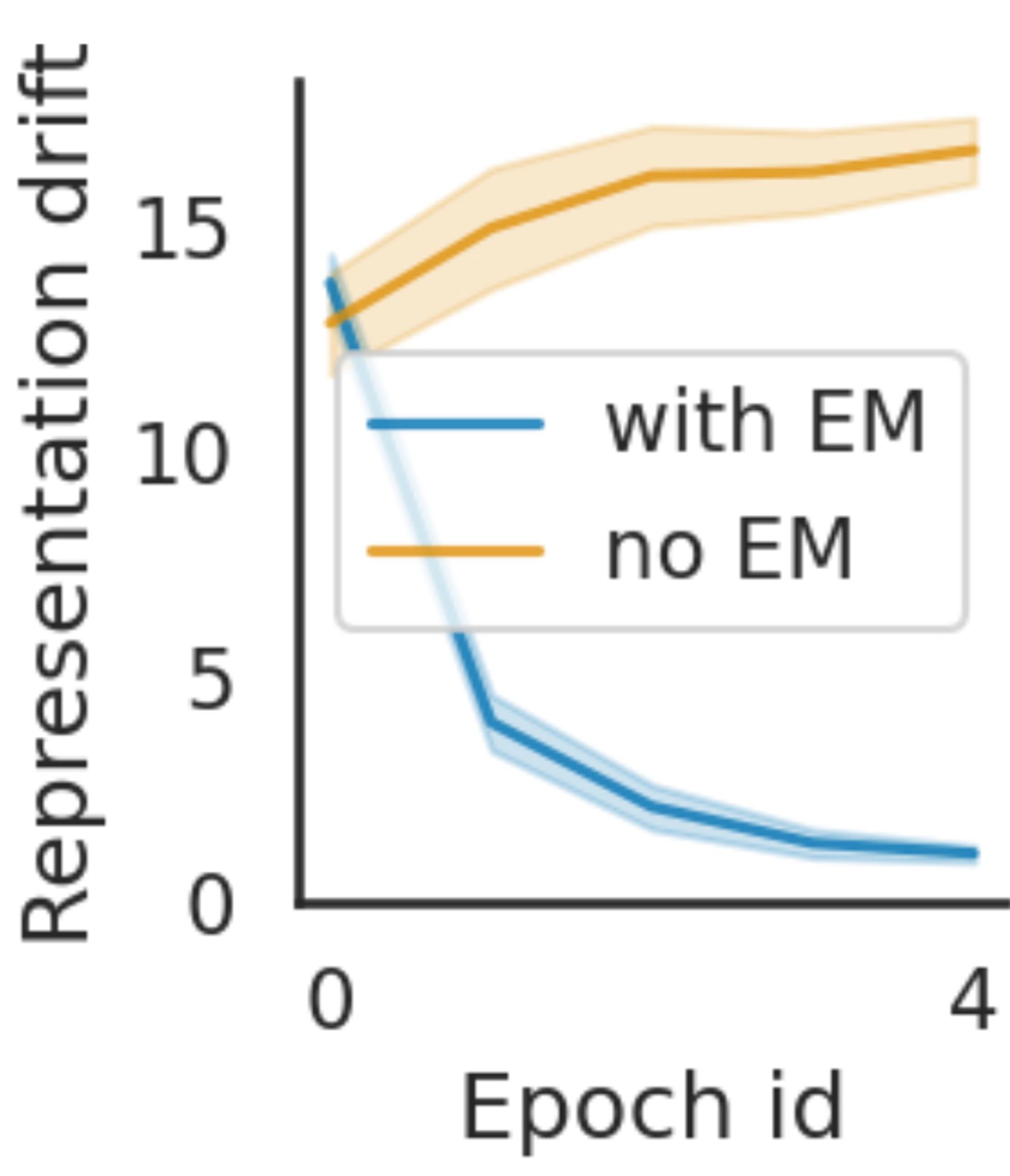

In simulation 2, the model had to learn 12 classification tasks that varied along a ring manifold. Models with EM learned the ring. Again, models without EM suffered from excessive representation drift, which hindered learning.

… this is because encoding and retrieving TRs in EM can reduce representation drift, which facilitates learning. Without EM, a “good representation” for the ongoing task is often non-unique, leading to unnecessary TR drift – change in TRs after performance converges.
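One way to make "TR drift" concrete is sketched below. This is only an illustration, not the metric used in the project: the function name `tr_drift`, the use of Euclidean distance, and the idea of passing a known convergence index are all assumptions.

```python
import math

def tr_drift(snapshots, converged_at):
    """Mean Euclidean step between consecutive TR snapshots taken after
    performance has converged (hypothetical metric, for illustration only).

    snapshots: list of TR vectors, one per training checkpoint
    converged_at: index of the first checkpoint after convergence (assumed known)
    """
    post = snapshots[converged_at:]
    if len(post) < 2:
        return 0.0  # not enough post-convergence checkpoints to measure change
    steps = [math.dist(a, b) for a, b in zip(post, post[1:])]
    return sum(steps) / len(steps)
```

Under this sketch, a TR that stays fixed after convergence scores 0, while a TR that keeps moving between equally good solutions scores higher.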

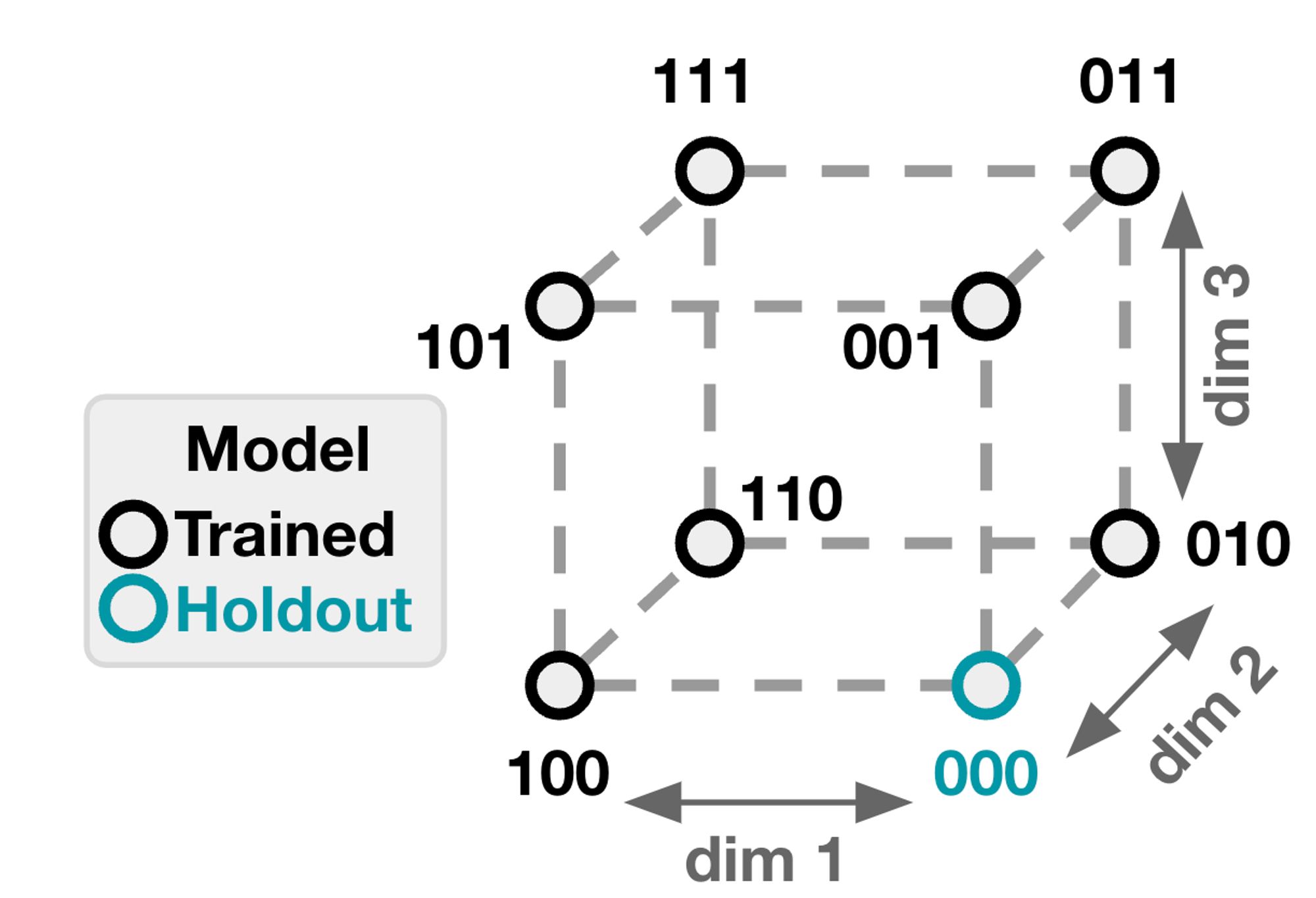

In simulation 1, the model had to learn 8 sequence prediction tasks that varied along 3 independent feature dimensions. Models with EM learned to represent the 3 dims in abstract format (Bernardi 2020)...

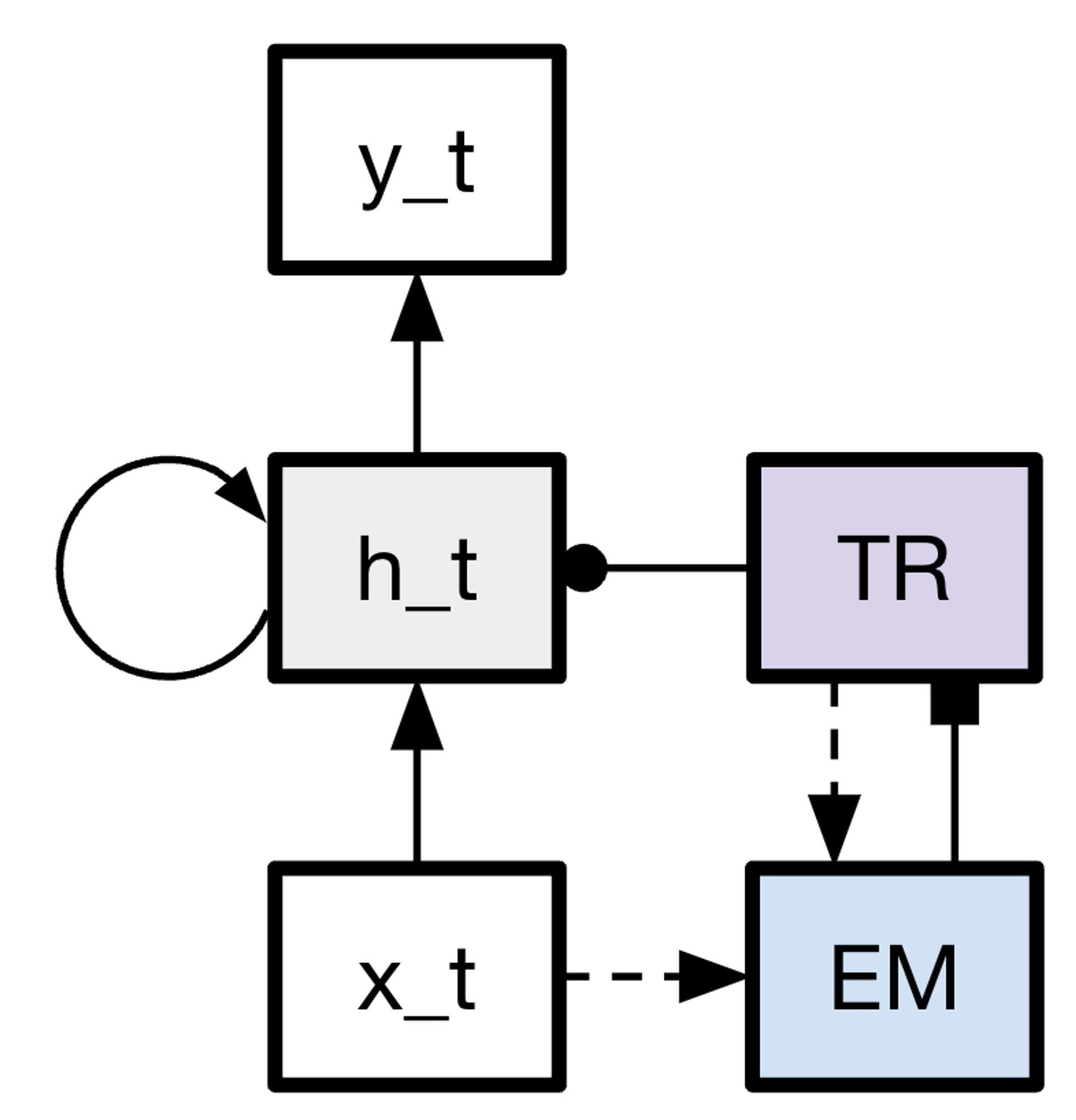

We developed an NN model augmented with a task representation (TR) layer and an episodic memory (EM) buffer. It uses EM to retrieve and encode TRs, so when it searches for a TR online (Giallanza 2023; Hummos 2022), it can retrieve previously used TRs from EM. 1/n
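A minimal sketch of the retrieve-or-search idea, under stated assumptions: the class `EpisodicMemory`, the cosine-similarity match, the `threshold` value, and the `search_fn` callback are all hypothetical stand-ins, not the project's actual implementation.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0

class EpisodicMemory:
    """Hypothetical key-value buffer: keys are context cues, values are stored TRs."""

    def __init__(self):
        self.keys = []
        self.values = []

    def encode(self, cue, tr):
        """Store a copy of the TR under the given cue."""
        self.keys.append(list(cue))
        self.values.append(list(tr))

    def retrieve(self, cue, threshold=0.9):
        """Return the TR whose key best matches the cue, or None if no key
        reaches the (assumed) similarity threshold."""
        if not self.keys:
            return None
        sims = [cosine(cue, k) for k in self.keys]
        best = max(range(len(sims)), key=sims.__getitem__)
        return list(self.values[best]) if sims[best] >= threshold else None

def select_tr(em, cue, search_fn):
    """Reuse a stored TR when EM has a close match; otherwise run the
    online search (search_fn, assumed) and encode the result."""
    tr = em.retrieve(cue)
    if tr is None:
        tr = search_fn(cue)
        em.encode(cue, tr)
    return tr
```

Because retrieval here is similarity-based, a cue from a nearby task can pull out a neighbor's TR, which is one simple way the "EM errors" mentioned elsewhere in the thread could arise.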