I’m pleased to announce our preprint 😃: “Toward a More Biologically Plausible Neural Network Model of Latent Cause Inference” (arxiv.org/abs/2312.08519). (1/N)

Let us know if you have any feedback/comments! I want to thank all my amazing co-authors/advisors: @ptoncompmemlab.bsky.social, @gershbrain.bsky.social, @cocoscilab.bsky.social, Tan Nguyen, Qihong Zhang, Uri Hasson & Jeff Zacks

We view this work as a useful step towards a unified computational modeling framework for event cognition, and we think it is highly relevant to current discussions on how to enable machine learning models to achieve human-level continual learning (Kudithipudi et al., 2022). (15/N)

Overall, these proof-of-concept simulations demonstrate that the SEM framework can be made much more biologically plausible while also overcoming one of SEM's limitations: its inability to extract structure shared across contexts... (14/N)

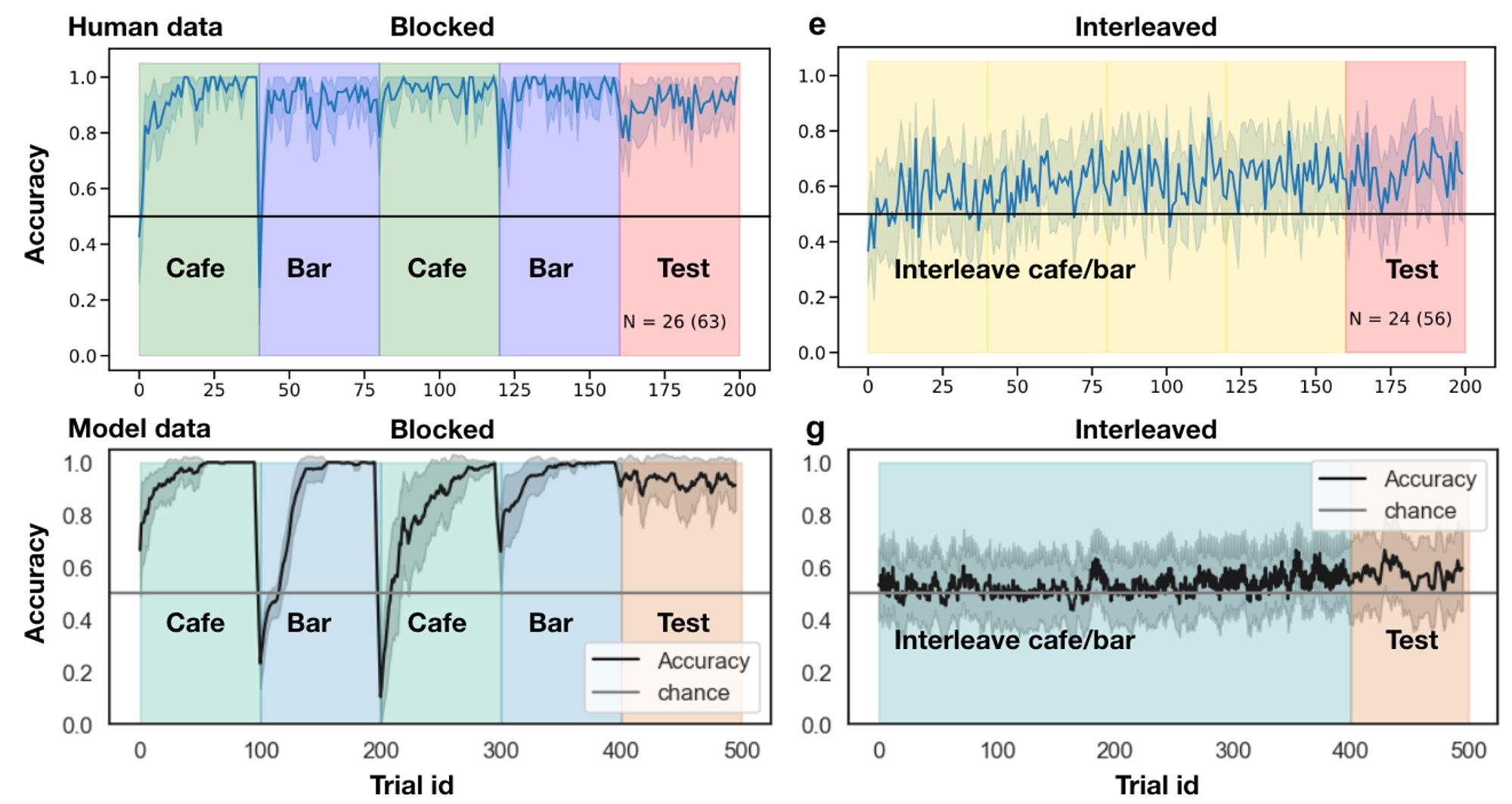

... These results suggest that the way humans segment events might emerge from the need to predict upcoming events. (13/N)

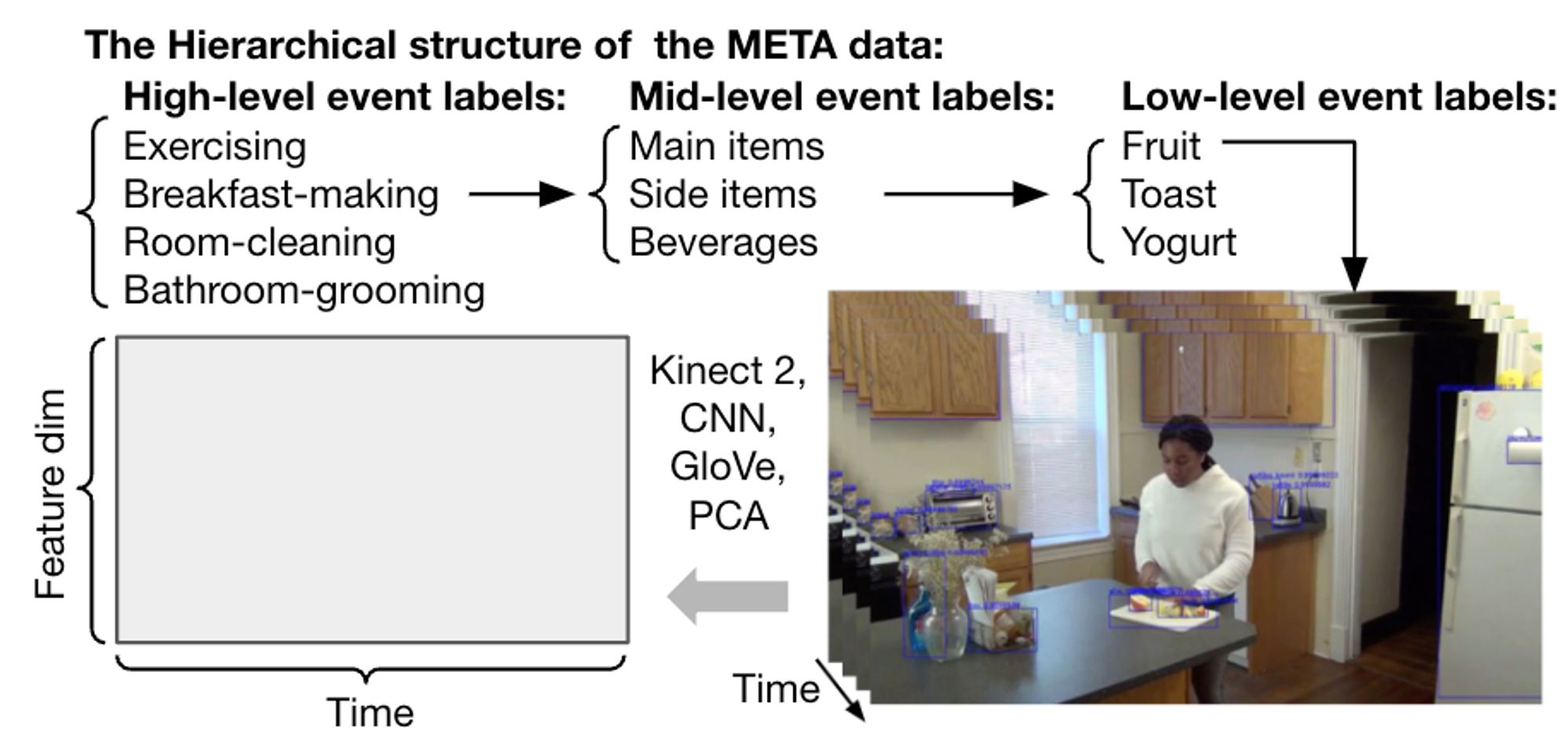

The latent causes inferred by LCNet captured the ground-truth event structure, and the inferred event boundaries were correlated with human boundary judgments, as was previously found with SEM2.0, a variant of SEM modified to account for this dataset (Bezdek et al., 2022b). (12/N)

Finally, we used LCNet to process naturalistic video data of daily events (Bezdek et al., 2022a). LCNet was trained to predict “what would happen next” (i.e., the next frame of the video). (11/N)
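To make the link between next-step prediction and segmentation concrete, here is a toy sketch of latent-cause inference in the spirit of SEM. At each step, the score of each latent cause is a sticky-CRP prior weight times how well that cause predicted the current observation. The most probable cause is chosen greedily, and an event boundary is marked wherever the chosen cause switches. Everything here is an illustrative assumption, not LCNet's actual architecture: 1-D numbers stand in for video frames, a running mean stands in for a trained network's next-frame prediction, and `segment`, `noise_sd`, and `new_cause_sd` are hypothetical names.

```python
import math

def gaussian_pdf(x, mean, sd):
    """Density of a normal distribution N(mean, sd**2) at x."""
    return math.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * math.sqrt(2 * math.pi))

def segment(observations, alpha=1.0, stickiness=10.0, noise_sd=0.5, new_cause_sd=10.0):
    """Greedy MAP latent-cause inference over a 1-D stream (hypothetical sketch).

    Stand-in for a learned predictor: each existing cause predicts the next
    observation as the running mean of the observations assigned to it.
    A brand-new cause predicts 0.0 with a broad standard deviation.
    """
    sums, counts = [], []    # per-cause sufficient statistics
    labels, boundaries = [], []
    prev = None              # cause chosen at the previous step
    for t, x in enumerate(observations):
        scores = []
        for k in range(len(counts)):
            prior = counts[k] + (stickiness if k == prev else 0.0)  # sticky-CRP weight
            prediction = sums[k] / counts[k]                        # "what happens next"
            scores.append(prior * gaussian_pdf(x, prediction, noise_sd))
        scores.append(alpha * gaussian_pdf(x, 0.0, new_cause_sd))   # option: new cause
        k = max(range(len(scores)), key=scores.__getitem__)         # MAP cause
        if k == len(counts):                                        # open a new cause
            sums.append(0.0)
            counts.append(0)
        sums[k] += x
        counts[k] += 1
        if prev is not None and k != prev:
            boundaries.append(t)  # inferred event boundary: the cause switched
        labels.append(k)
        prev = k
    return labels, boundaries

stream = [0.0, 0.1, -0.1, 0.05, 5.0, 5.1, 4.9, 5.05]
labels, boundaries = segment(stream)
print(labels)      # [0, 0, 0, 0, 1, 1, 1, 1]
print(boundaries)  # [4]
```

The boundary lands exactly where the old cause's prediction fails badly, so in this toy the segmentation falls directly out of prediction error.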

For related accounts of these findings, see Beukers et al., 2023 and Giallanza et al., 2023. (10/N)

... LCNet successfully explained these data by assuming that humans hold a prior belief that the latent causes of the environment are temporally autocorrelated (i.e., the current latent cause tends to persist from one moment to the next). This belief is more consistent with the blocked training curriculum, which is why blocking facilitates learning. (9/N)
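One way to make a temporally autocorrelated prior concrete is a "sticky" Chinese restaurant process (CRP), the prior used in the original SEM model. We assume that form here purely for illustration. This thread does not say which exact prior LCNet uses, and the parameter values below are arbitrary. Under a sticky CRP, the weight of an existing cause is its count so far, plus a stickiness bonus if it was also the cause at the previous step. A new cause gets weight `alpha`. The sketch scores a blocked and an interleaved ordering of the same two contexts (the function name is hypothetical):

```python
import math
from collections import Counter

def sticky_crp_log_prior(labels, alpha=1.0, stickiness=10.0):
    """Log prior probability of a sequence of latent-cause labels under a
    sticky CRP. Labels are ints; a label not seen before is a new cause."""
    counts = Counter()  # how many steps each cause has been active so far
    prev = None         # cause active at the previous step
    log_p = 0.0
    for z in labels:
        # Unnormalized weight of each existing cause; the previous cause gets
        # an extra `stickiness` bonus (this is the temporal autocorrelation).
        weights = {k: n + (stickiness if k == prev else 0.0) for k, n in counts.items()}
        total = sum(weights.values()) + alpha  # alpha = weight of a new cause
        numerator = weights[z] if z in counts else alpha
        log_p += math.log(numerator / total)
        counts[z] += 1
        prev = z
    return log_p

blocked = [0, 0, 0, 0, 1, 1, 1, 1]      # context A four times, then context B
interleaved = [0, 1, 0, 1, 0, 1, 0, 1]  # A and B alternate

print(sticky_crp_log_prior(blocked) > sticky_crp_log_prior(interleaved))  # True
# Plain CRP (no stickiness) is exchangeable: both orders are equally likely.
print(math.isclose(sticky_crp_log_prior(blocked, stickiness=0.0),
                   sticky_crp_log_prior(interleaved, stickiness=0.0)))  # True
```

With `stickiness=0.0`, the prior reduces to a plain CRP, which ignores ordering. The stickiness term is what makes the blocked sequence more probable a priori.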

In Simulation 2, LCNet accounted for data showing that humans learned event schemas better under a blocked training curriculum than under an interleaved curriculum (Beukers et al., 2023), a pattern that standard neural network models can't explain. (8/N)

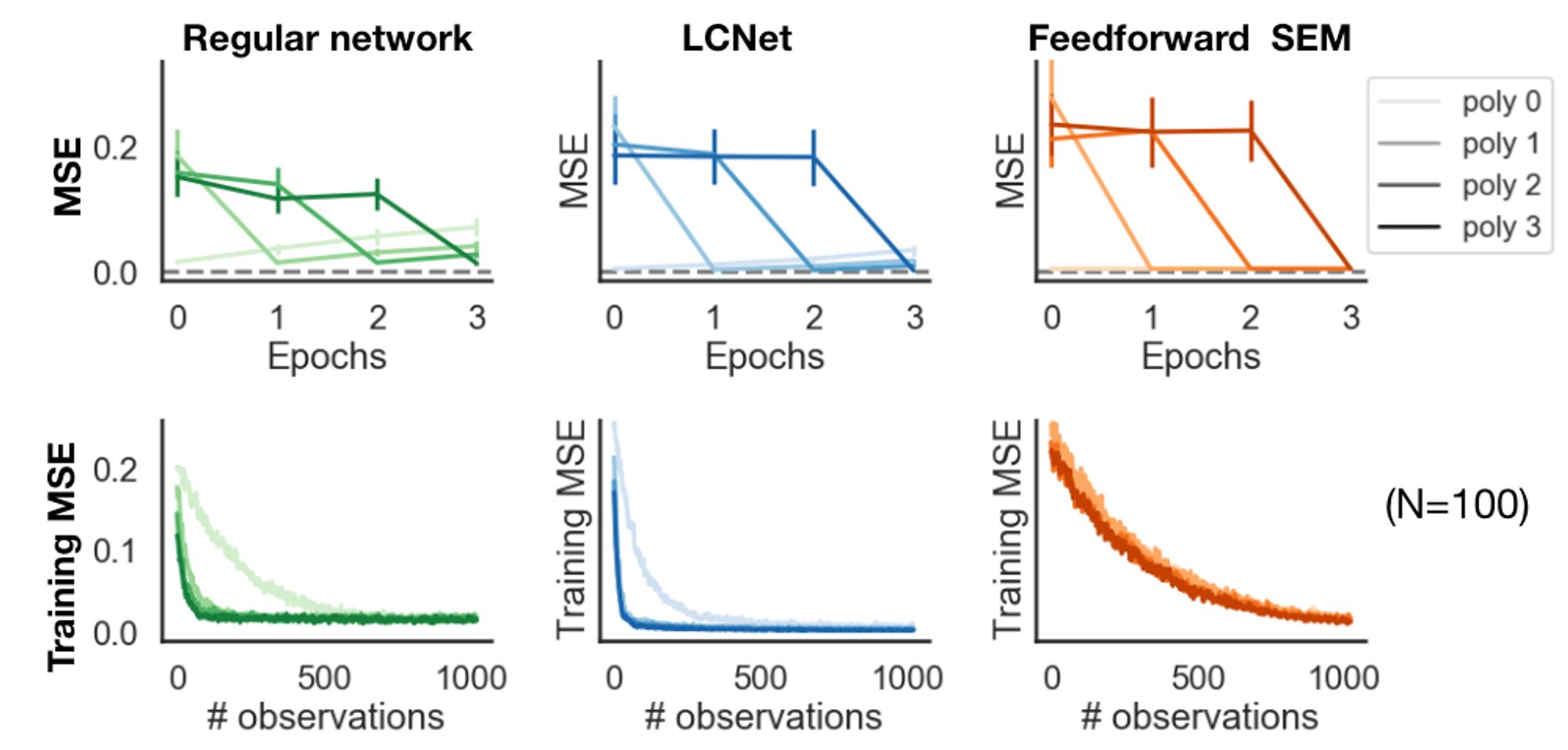

In Simulation 1, we found that LCNet was able to extract and reuse the structure shared across contexts (e.g., it learned a new, related task faster) and to overcome catastrophic interference. (7/N)