The hippocampus doesn't just track associative relations; it also gates memory on reward signals. This suggests that a "learning" terminal reward is some kind of signal to the hippocampus, and I would guess it's there to mark where in-context learning happened. pubmed.ncbi.nlm.nih.gov/21851992/

According to the Hebb rule, the change in the strength of a synapse depends only on the local interaction of presynaptic and postsynaptic events. Studies at many types of synapses indicate that the ea...
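The quoted Hebb rule can be written as a weight change Δw_ij = η · post_i · pre_j, where each synapse updates only from its own presynaptic and postsynaptic activity. Below is a minimal Python sketch, assuming rate-coded activities and a hypothetical learning rate `lr`; the function name `hebbian_update` is illustrative and not taken from the linked paper. It models plain Hebbian learning only, with no reward gating.

```python
def hebbian_update(weights, pre, post, lr=0.1):
    """Plain Hebbian rule: w[i][j] += lr * post[i] * pre[j].

    Each weight changes using only the activity of the two neurons it
    connects (local interaction), as the Hebb rule states.
    `weights` is a list of rows, one row per postsynaptic neuron.
    """
    return [
        [w_ij + lr * post_i * pre_j for w_ij, pre_j in zip(row, pre)]
        for row, post_i in zip(weights, post)
    ]


# Two presynaptic inputs, two postsynaptic neurons; only input 0 is active.
w = [[0.0, 0.0], [0.0, 0.0]]
w = hebbian_update(w, pre=[1.0, 0.0], post=[1.0, 1.0], lr=0.5)
print(w)  # [[0.5, 0.0], [0.5, 0.0]]
```

Only synapses whose presynaptic input was active get strengthened; the silent input's weights stay at zero.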

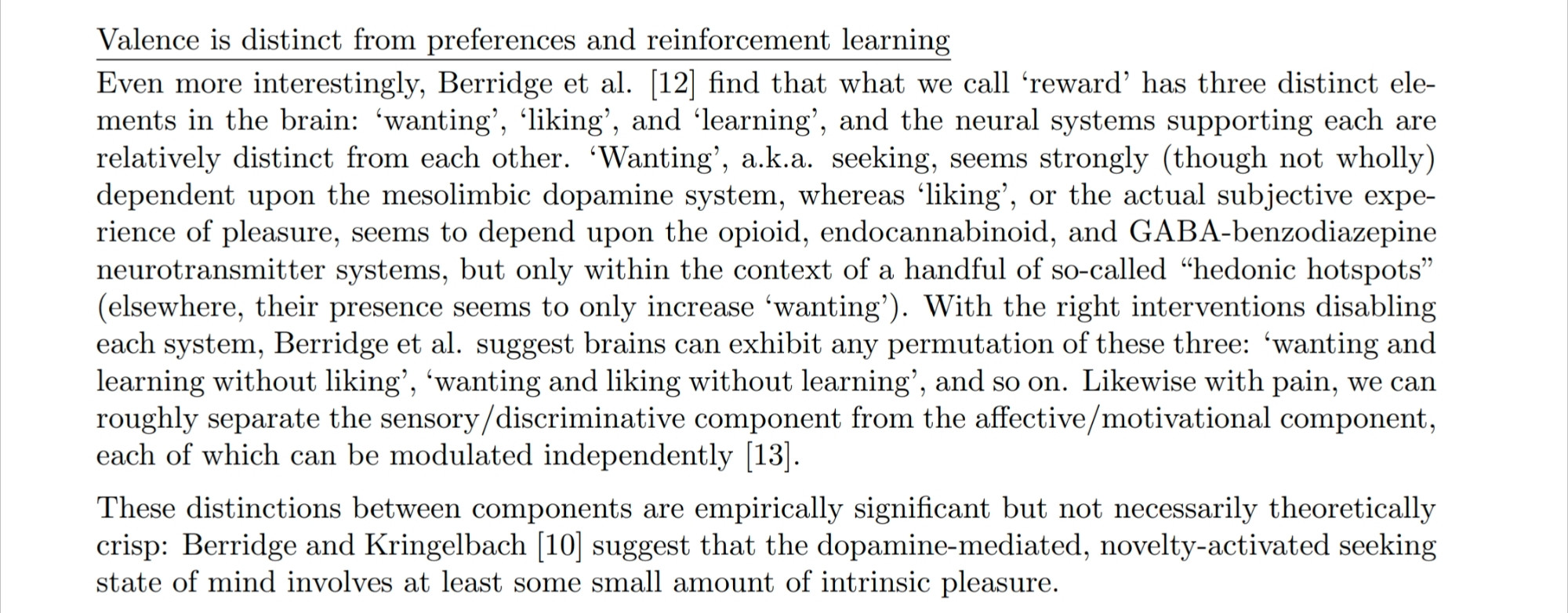

That is, why have a "learning" reward type separate from wanting and liking? If the purpose of these terminal rewards is to tag memories for inclusion in the hippocampus, then it would make sense to have a specific reward signal for when you manage to figure out a pattern locally, so that it can be stored.

I don't have any mechanistic evidence, but I will note that the human brain having a "learning" terminal reward makes a lot more sense in the context of hippocampal reward gating if it's there to fish out in-context learned patterns from daily experience. bsky.app/profile/jdp....

It's amazing to me that Firefox has lasted as long as it has. Mozilla has always been one of the good guys, and it'll be sad to watch them go when the Google money stops coming in.

I think these visualizations are referenced/reproduced in the book Silence on the Wire by Michal Zalewski.

No, I suspect humans bootstrap text from understanding other modalities. There's a reason we teach children with picture books.

For what it's worth, a big part of why LLMs confabulate is that they don't have robust memories. The problem isn't that they "predict the next token"; that's basically an IQ test (e.g. Raven's Progressive Matrices), and any cognitive process can be framed that way. It's that they basically have dementia.

I would just like to note that I explicitly say part of the solution has to involve how the feeds are designed. bsky.app/profile/jdp....

Studies find that rage is the most viral emotion, and this is basically a zero-day exploit in human psychology. Nobody knows what to do about this! It means that to fix feeds, we basically have to go out of our way not to give people what they "want". www.nbcnews.com/technolog/yo...

What I found particularly interesting in that article was how it explicitly enumerates the different kinds of play children can engage in. It seems that a playground for AI agents should also be designed around an explicit list of affordances: the different things the agent can do.